The Sign Language Interpreter is a robot with two arms and hands that performs sign language. It helps hearing people communicate with people who were born deaf or who have hearing loss. Over 5% of the world's population, or 430 million people (including 34 million children), have disabling hearing loss. It is estimated that by 2050 over 700 million people (one in every ten) will have disabling hearing loss, so practical ways of communicating with deaf people will become increasingly necessary. Our interpreter will be able to perform up to 40-50 signs, including words and greetings (e.g. hello, goodbye), based on input given through a mobile application. For instance, suppose a person who does not know sign language wants to communicate with a person who cannot hear. He or she can simply enter the message in the mobile application, and the robotic arms will convey it to the deaf person by performing the corresponding signs.

Project Proposal

1. High-level project introduction and performance expectation

Over 5% of the world's population, or 430 million people (including 34 million children), have disabling hearing loss. It is estimated that by 2050 over 700 million people (one in every ten) will have disabling hearing loss, so practical ways of communicating with deaf people will become increasingly necessary. The Sign Language Interpreter is a robot that consists of two forearms with hands. It makes communication between a hearing person and a deaf person easier by translating input into sign language, and so helps people communicate with those who were born deaf or who have hearing loss. Our interpreter will be able to perform approximately 40-50 signs, including words and greetings (e.g. hello, goodbye), based on input given through a mobile application. For instance, suppose a person who does not know sign language wants to communicate with a person who cannot hear. He or she can simply enter the message in the mobile application, and the robotic arms will convey it to the deaf person by performing the corresponding signs. The system will also be able to distinguish between the voices of different people.

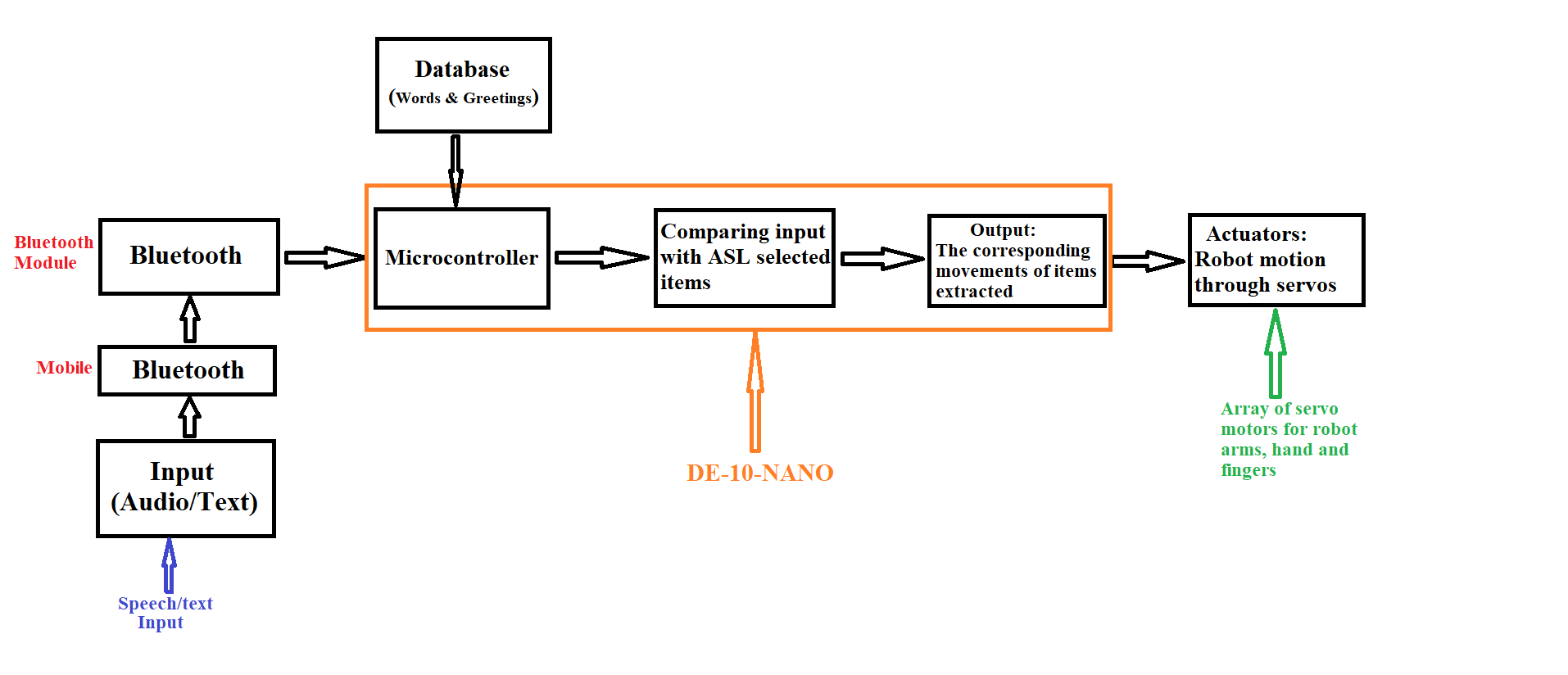

2. Block Diagram

3. Expected sustainability results, projected resource savings

A sign language interpreter robot is an important assistive robot because it makes communication easier for deaf people in many areas of life. It meets a fundamental communication need of every institute for the deaf. Our two-armed prototype performs signs from American Sign Language (ASL), which benefits deaf and non-speaking people who use ASL. It reduces the need for human sign language interpreters in workplaces, educational institutes for deaf people, hospitals and many other places. One of its most important uses is in the education of deaf students, and every such institute would benefit from having this interpreter.

Deaf and non-speaking people need human sign language interpreters in many areas of life, and serious communication problems arise when none is available. A sign language interpreter robot can ease this problem by reducing the need for a human interpreter. Many sectors do not have human interpreters for the deaf community at all, so deaf people face great difficulty in communicating. This is a serious issue that deserves the attention of the health sector worldwide. The main purpose of our project is to build a sustainable and user-friendly device for the deaf community.

The project is sustainable in that it consists of two 3D-printed arms with the hardware housed inside the arms. Its estimated weight is 2 kg. Initially we are building only the two-arm structure, but in future we intend to build the full upper body, with some innovations, to give it a more presentable appearance.

4. Design Introduction

The basic design of the sign language interpreter consists of a 3D-printed structure of two arms, a Bluetooth module, a microcontroller, a motor driver, servo motors and stepper motors. First, the input is given as text or audio from the mobile application to the Bluetooth module, which then transfers it to the microcontroller. All processing is done by the microcontroller, which sends instructions to the motors according to the program burnt onto it. On receiving these signals the motors move accordingly, so that any word from the predefined set programmed into the controller is performed in response to user input. All the motors will be synchronized for accurate and precise results. The schematic diagram was drawn in Proteus.
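The first stage of this data flow, turning the byte stream from the Bluetooth module into whole text commands, can be sketched as follows. This is only an illustration under stated assumptions: the proposal does not specify a message format, so the newline terminator, the lower-casing of input, and the `CommandFramer` name are all hypothetical, and the sketch is written in Python for readability rather than as firmware for the actual controller.

```python
from typing import List


class CommandFramer:
    """Collects raw bytes from a serial-style link into complete text commands.

    Assumption: each command sent by the mobile application ends with a
    newline. The proposal does not define the real framing.
    """

    def __init__(self, terminator: bytes = b"\n") -> None:
        self._terminator = terminator
        self._buffer = bytearray()

    def feed(self, chunk: bytes) -> List[str]:
        """Add newly received bytes and return every command completed by them."""
        self._buffer.extend(chunk)
        commands: List[str] = []
        while True:
            index = self._buffer.find(self._terminator)
            if index < 0:
                break  # no complete command left in the buffer
            raw = bytes(self._buffer[:index])
            # Drop the consumed command and its terminator from the buffer.
            del self._buffer[: index + len(self._terminator)]
            text = raw.decode("utf-8", errors="ignore").strip().lower()
            if text:
                commands.append(text)
        return commands


# Bytes may arrive split at arbitrary points, so feed them chunk by chunk.
framer = CommandFramer()
received: List[str] = []
for chunk in (b"Hel", b"lo\nGood", b"bye\n"):
    received.extend(framer.feed(chunk))
print(received)  # ['hello', 'goodbye']
```

Buffering in this way means a word is only passed on for processing once it has arrived in full, regardless of how the link happens to split the bytes.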

5. Functional description and implementation

Sign language is the native language of the deaf community and provides full access to communication. The main aim of our project is therefore to enable communication with people who are deaf or hearing impaired. The movement of the robotic arm is controlled by servo and stepper motors, which allow the arm to move precisely and in exact increments. The arm moves only for the predefined set of words programmed on the FPGA. Synchronization plays an important role in any multi-motor system, and especially in robotic systems; without synchronized motors the device will not work correctly.

The device will be a two-armed robot that translates input commands into sign language. It will be constructed from 3D-printed parts and will consist of an array of servo motors that control the joints, 2 stepper motors that control the position and precision of the arm, and 1 motor driver to step up the current. First, we will pair the mobile phone's Bluetooth with the Bluetooth module connected to the controller. The user then provides input as text or speech through the mobile application, which sends it to the Bluetooth module and on to the board. The board compares the input with the predefined database. If a matching entry exists, the motors respond in the sequence retrieved from the database, thereby performing the sign.
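The comparison of the input against the predefined database can be sketched as a simple table lookup. The sketch below is a hedged illustration, not the project's implementation: the names `SIGN_DATABASE`, `lookup_sign` and `plan_message` are hypothetical, the keyframe values are placeholders rather than calibrated poses, and only three servo channels are shown for brevity.

```python
from typing import Dict, List, Optional, Tuple

# A keyframe pairs servo angles (degrees) with stepper positions (steps).
Keyframe = Tuple[Tuple[int, ...], Tuple[int, ...]]

# Hypothetical placeholder entries. Real values would come from calibrating
# the arms for each of the 40-50 planned signs.
SIGN_DATABASE: Dict[str, List[Keyframe]] = {
    "hello": [((90, 45, 0), (0, 0)), ((90, 45, 30), (100, 0))],
    "goodbye": [((0, 0, 0), (0, 0)), ((45, 45, 45), (50, 50))],
}


def lookup_sign(word: str) -> Optional[List[Keyframe]]:
    """Return the stored motor sequence for a word, or None if it is unknown."""
    key = " ".join(word.lower().split())  # normalise case and whitespace
    return SIGN_DATABASE.get(key)


def plan_message(message: str) -> Tuple[List[Keyframe], List[str]]:
    """Split a message into words and collect the keyframes of every known sign.

    Words without a database entry are returned separately so that the
    application could report them to the user instead of failing silently.
    """
    keyframes: List[Keyframe] = []
    unknown: List[str] = []
    for word in message.split():
        sequence = lookup_sign(word)
        if sequence is None:
            unknown.append(word.lower())
        else:
            keyframes.extend(sequence)
    return keyframes, unknown


frames, missing = plan_message("Hello friend goodbye")
print(len(frames))  # 4
print(missing)      # ['friend']
```

Returning the unmatched words separately is a design choice of this sketch; the proposal only states that the motors respond when a matching entry exists.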

6. Performance metrics, performance to expectation

7. Sustainability results, resource savings achieved

Many people are deaf or hearing impaired, and it is not feasible for everyone to learn sign language in order to communicate with them. In office work, for example, there should be a way for hearing people to communicate with a deaf colleague. Our sign language interpreter addresses this problem: a hearing person simply enters a message in the mobile application, the application sends the information to the robotic hands, and the hands perform it in American Sign Language (ASL). In this way the message is delivered to the deaf person.

While we usually see robotics applied to industrial or research applications, there are plenty of ways robots could help in everyday life as well: for instance, an autonomous guide for blind people, a kitchen robot that helps disabled people cook, or a robot arm that can perform rudimentary sign language. A deaf or non-speaking person who needs to appear in court, for example, needs a sign language interpreter, but such an interpreter is often not readily available. This is where a low-cost option like this can offer a solution.

Our prototype of the sign language interpreter works by synchronizing 12 servo motors and 4 stepper motors. The servo motors are used for speed control, while the stepper motors are used for precision and position control.
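One common way to keep several motors in step is to divide every move into the same number of intermediate poses, so that all 16 channels start and finish together. The sketch below illustrates that idea with linear interpolation. It is an assumption made for illustration, not the synchronization scheme the prototype actually uses, and `synchronized_steps` is a hypothetical helper.

```python
from typing import List, Sequence


def synchronized_steps(
    start: Sequence[float], target: Sequence[float], steps: int
) -> List[List[float]]:
    """Return `steps` intermediate poses moving every channel from start to target.

    Each channel covers the same fraction of its own travel in every step, so
    channels with long and short moves all arrive at the same time. Units are
    per channel (degrees for servos, step counts for steppers).
    """
    if len(start) != len(target):
        raise ValueError("start and target must have the same number of channels")
    if steps < 1:
        raise ValueError("steps must be at least 1")
    poses: List[List[float]] = []
    for i in range(1, steps + 1):
        fraction = i / steps
        poses.append([s + (t - s) * fraction for s, t in zip(start, target)])
    return poses


# 12 servo channels followed by 4 stepper channels, as in the prototype.
start_pose = [0.0] * 16
target_pose = [90.0] * 12 + [200.0] * 4
poses = synchronized_steps(start_pose, target_pose, steps=4)

print(poses[1][0])   # 45.0  (a servo halfway through its move)
print(poses[1][12])  # 100.0 (a stepper halfway through its move)
print(poses[-1] == target_pose)  # True
```

Sending one pose per control tick would move all motors along their paths in lockstep; a real controller would also have to respect each motor's speed limits.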

8. Conclusion

Our prototype of the sign language interpreter will perform American Sign Language signs for a predefined set of words. All 16 motors (12 servos and 4 steppers) will be synchronized with minimum delay, and the motors will be controlled so that the arm movements are accurate.

The system we are developing will enable humanoid robots to interact through sign language, a medium that is crucial for providing daily services to deaf persons in future. We are still at an early stage and are developing a prototype that has only hands and forearms. In future we expect to develop a complete humanoid robot that can perform American Sign Language.
