An oyster farm monitoring system that detects when cleaning or repair is needed.

The Oyster Farm Autonomous Monitor project will consist of an anchored buoy system that continuously monitors an underwater oyster farm using video analytics and other sensor data. The data, processed locally on the edge, will determine when oyster bed cleaning or repairs are due and will trigger alerts in the case of extreme swells or possible theft. Other sensor data, such as pH level, water temperature, ambient temperature, and visibility, are also fed into the AI models to predict needed cleanings well in advance.

Combining the team members' backgrounds in hardware engineering, marine biology, and consumer product architecture, The Educated Robot team has accepted the GEF SGP Biodiversity proposal "Mauritius: Improving Livelihoods of Communities - Oyster Farming for Jewelry Making in Rodrigues" to present one solution to help our world. This use case is a strong real-world example of the capabilities of edge computing, using Intel Edge-centric FPGAs for advanced image processing. Analog Devices plug-in cards gather additional, diverse sensor data, which is stored and analyzed using Microsoft Azure Cloud Services.

Project Proposal

1. High-level project introduction and performance expectation

This project will consist of an anchored buoy system that continuously monitors the underwater oyster farm using video analytics and other sensor data. The data, processed locally on the edge, will determine when oyster bed cleaning or repairs are due and will trigger alerts in the case of extreme swells or possible theft. Other sensor data, such as pH level, water temperature, ambient temperature, and visibility, are also fed into the models to predict needed cleanings well in advance.

All data processing occurs on the local buoy system, where the large volume of video and other sensor data can be used continuously to improve the analysis. Only the resulting compact output data is stored locally and uploaded periodically to the Azure cloud, where it is used to produce productivity reports and to analyze data across multiple systems. This cloud data can also be mined to compare farms and suggest optimization parameters that would help improve individual farms' oyster beds. Depending on the farm location, the buoy will connect via WiFi or Bluetooth to a land-based or central sea-based terminal that uploads the data to the cloud. For a lower-cost setup, farmers could take a boat out to the buoy and collect the data over Bluetooth on their cell phones, which would then automatically upload it to the Azure cloud once back within cellular range. The phone app would show real-time status, let the farmer give feedback that continuously enhances the model (was the bed really clean or not?), and present reports and analytics from the cloud.
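The "store locally, upload periodically" behavior described above amounts to a store-and-forward queue. A minimal sketch in Python, assuming each result is a small JSON-serializable dictionary and that the transport (the WiFi terminal or a farmer's phone over Bluetooth) is wrapped in a hypothetical `upload(batch) -> bool` callback. The `StoreAndForward` class and the record fields are illustrative only, and SQLite from Python's standard library stands in for whatever local storage the buoy actually uses.

```python
import json
import sqlite3
from typing import Callable, List


class StoreAndForward:
    """Hypothetical local store for compact analysis records.

    Records stay in SQLite on the buoy and are marked as uploaded only after
    the upload callback reports success, so nothing is lost if the link
    drops mid-transfer.
    """

    def __init__(self, path: str = ":memory:") -> None:
        self.db = sqlite3.connect(path)
        self.db.execute(
            "CREATE TABLE IF NOT EXISTS records ("
            " id INTEGER PRIMARY KEY AUTOINCREMENT,"
            " payload TEXT NOT NULL,"
            " uploaded INTEGER NOT NULL DEFAULT 0)"
        )

    def store(self, record: dict) -> None:
        # Only the small result record is kept, never the raw video.
        self.db.execute("INSERT INTO records (payload) VALUES (?)",
                        (json.dumps(record),))
        self.db.commit()

    def pending(self) -> int:
        return self.db.execute(
            "SELECT COUNT(*) FROM records WHERE uploaded = 0").fetchone()[0]

    def flush(self, upload: Callable[[List[dict]], bool],
              batch_size: int = 50) -> int:
        """Send pending records oldest-first; return how many were confirmed."""
        sent = 0
        while True:
            rows = self.db.execute(
                "SELECT id, payload FROM records WHERE uploaded = 0 "
                "ORDER BY id LIMIT ?", (batch_size,)).fetchall()
            if not rows:
                return sent
            batch = [json.loads(payload) for _, payload in rows]
            if not upload(batch):
                # Link dropped: leave the records pending for the next visit.
                return sent
            self.db.executemany("UPDATE records SET uploaded = 1 WHERE id = ?",
                                [(row_id,) for row_id, _ in rows])
            self.db.commit()
            sent += len(rows)


if __name__ == "__main__":
    store = StoreAndForward()
    for hour in range(3):
        store.store({"hour": hour, "fouling_score": 0.1 * hour})
    print(store.flush(lambda batch: True))  # prints 3
    print(store.pending())                  # prints 0
```

Marking records only after a confirmed upload means a farmer who motors away mid-sync simply resumes where the last batch left off on the next visit.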

A solar-charged battery provides power. Battery charge and sunlight availability can be monitored to determine the optimal frequency of data capture, and the hardware can be put into a low-power mode when not needed. The health status of the buoy system would also be monitored, with alerts sent out as needed. All components except the submerged camera, which is attached to the anchor line, would reside in a waterproof housing that floats on the surface as the buoy. Each self-contained system will be tethered to the oyster beds with two simple ropes so that the camera always keeps the proper orientation and field of view of the oysters.
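Choosing the capture frequency from battery charge and sunlight can be sketched as a small policy function. This is a minimal illustration under stated assumptions: the 15-minute floor, the 15% critical charge level, and the 70/30 weighting of battery versus sunlight are all hypothetical values, and only the 60-minute ceiling comes from the proposal's "at least once an hour" target.

```python
from typing import Optional

# Hypothetical tuning values; real numbers would come from field testing.
MIN_INTERVAL_MIN = 15   # fastest capture rate when energy is plentiful
MAX_INTERVAL_MIN = 60   # proposal baseline: at least one photo per hour
CRITICAL_SOC = 0.15     # below this charge, stay in low-power mode


def capture_interval_minutes(state_of_charge: float,
                             solar_watts: float,
                             panel_rated_watts: float) -> Optional[int]:
    """Pick the next capture interval from battery charge and sunlight.

    Returns None when the battery is critically low, meaning the board should
    remain in low-power mode (and raise a health alert) instead of capturing.
    """
    if not 0.0 <= state_of_charge <= 1.0:
        raise ValueError("state_of_charge must be in [0, 1]")
    if panel_rated_watts <= 0:
        raise ValueError("panel_rated_watts must be positive")
    if state_of_charge < CRITICAL_SOC:
        return None
    # Fraction of rated panel output currently available, clamped to [0, 1].
    sun = max(0.0, min(1.0, solar_watts / panel_rated_watts))
    # Blend battery headroom and sunlight into one energy budget in [0, 1].
    budget = 0.7 * state_of_charge + 0.3 * sun
    # More budget gives a shorter interval, linearly between the two bounds.
    interval = MAX_INTERVAL_MIN - budget * (MAX_INTERVAL_MIN - MIN_INTERVAL_MIN)
    return int(round(interval))


if __name__ == "__main__":
    print(capture_interval_minutes(1.0, 20.0, 20.0))  # full battery, full sun
    print(capture_interval_minutes(0.5, 0.0, 20.0))   # half battery, night
    print(capture_interval_minutes(0.1, 0.0, 20.0))   # critical: None
```

Weighting stored charge above instantaneous sunlight keeps a passing cloud from swinging the schedule while still letting a sunny day buy extra captures.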

With a "designed for production" focus and an emphasis on a low-cost, simple-to-deploy system, we believe the total price of the deployed solution could be well under $2,500.

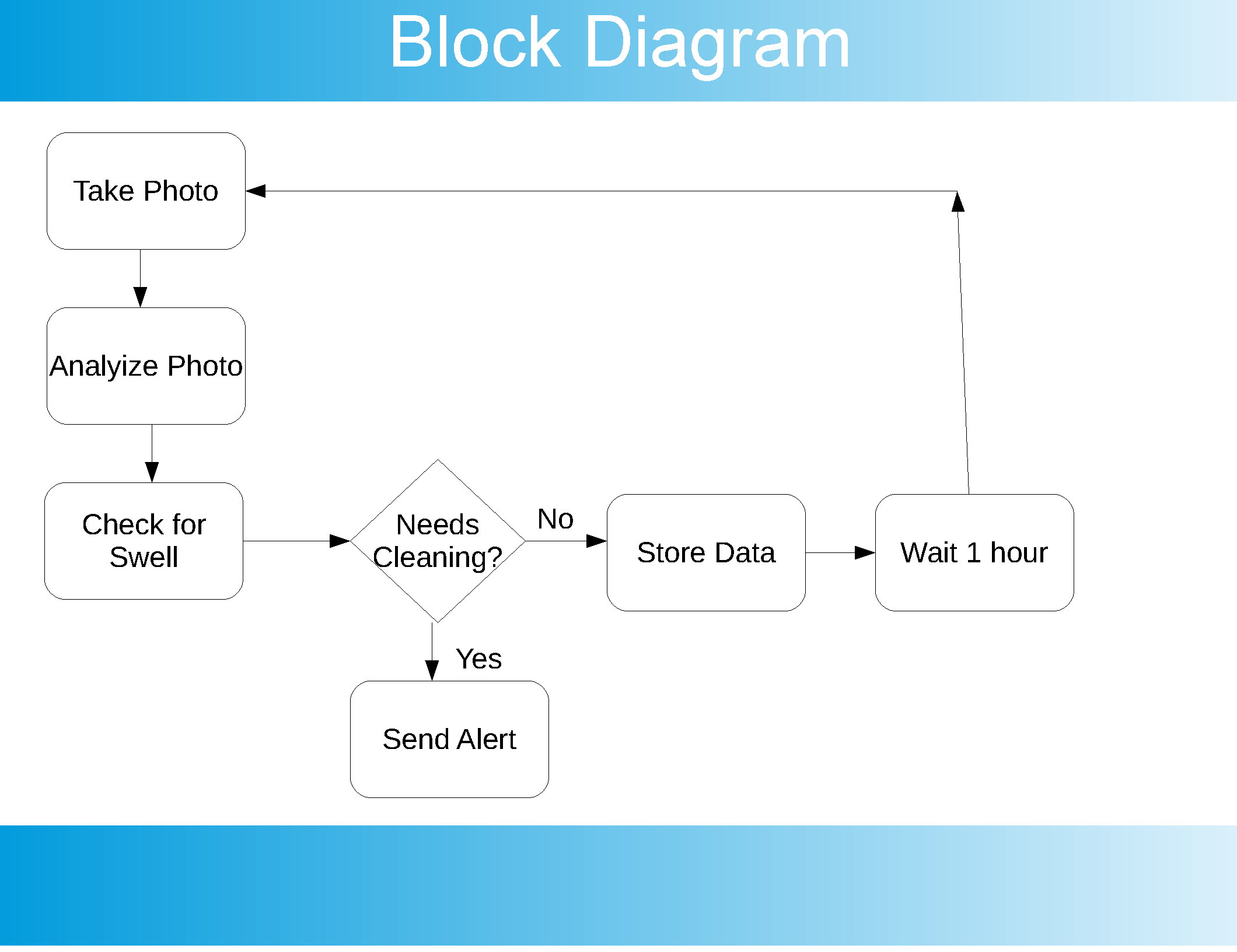

2. Block Diagram

The compute system inside the buoy is designed to grab a photo from the camera at least once an hour and analyze it to determine whether the oyster bed needs cleaning. Readings from other sensors, including temperature, humidity, and swell (motion), are also captured. The photo, analysis, and sensor data are stored locally on the buoy system. If cleaning is needed, an alert is generated and, if networking is set up, sent to the farmer. If no network is available, the farmer can periodically approach the buoy by boat, and the phone collects the data automatically over Bluetooth once in range.
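The per-photo "does this bed need cleaning?" decision can be illustrated with a deliberately naive stand-in. This sketch does not represent the FPGA computer vision model, which the proposal does not specify: it assumes a photo is available as a list of (R, G, B) pixels and uses the share of strongly green-dominant pixels as a rough proxy for algae growth. The `margin` and `threshold` values, and the `fouling_score` and `analyze_capture` names, are hypothetical.

```python
from dataclasses import dataclass
from typing import Iterable, Tuple

Pixel = Tuple[int, int, int]  # (R, G, B), each 0-255


@dataclass
class CaptureResult:
    fouling_score: float  # fraction of pixels flagged as growth, 0.0 to 1.0
    needs_cleaning: bool


def fouling_score(pixels: Iterable[Pixel], margin: int = 20) -> float:
    """Placeholder heuristic: share of pixels whose green channel exceeds
    both red and blue by at least `margin`, as a rough proxy for algae."""
    total = flagged = 0
    for r, g, b in pixels:
        total += 1
        if g - max(r, b) >= margin:
            flagged += 1
    return flagged / total if total else 0.0


def analyze_capture(pixels: Iterable[Pixel],
                    threshold: float = 0.3) -> CaptureResult:
    """Score one photo and flag it when the score reaches `threshold`."""
    score = fouling_score(pixels)
    return CaptureResult(fouling_score=score, needs_cleaning=score >= threshold)


if __name__ == "__main__":
    # 4 green "growth" pixels out of 10 gives a score of 0.4.
    photo = [(40, 120, 60)] * 4 + [(90, 90, 90)] * 6
    print(analyze_capture(photo))
```

A real deployment would replace the color rule with a trained model and would need to correct for the blue-green cast of underwater images, which this heuristic ignores. The useful part of the structure is that each capture reduces to a single score plus a yes/no flag, which is what gets stored and alerted on.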

A phone app displays the current state and any alerts, and uploads all of the data to the cloud once the phone is back in cell range. The cloud data is analyzed to predict when the next cleaning will be needed. The prediction takes all of the captured data into account, including the photo analyses and all sensor readings over time. The app also lets the farmer give immediate feedback, based on the current and past photos, on whether a cleaning is actually needed at that time. This feedback is incorporated into both the analysis algorithm on the buoy's FPGA and the cloud data for better predictive capability.


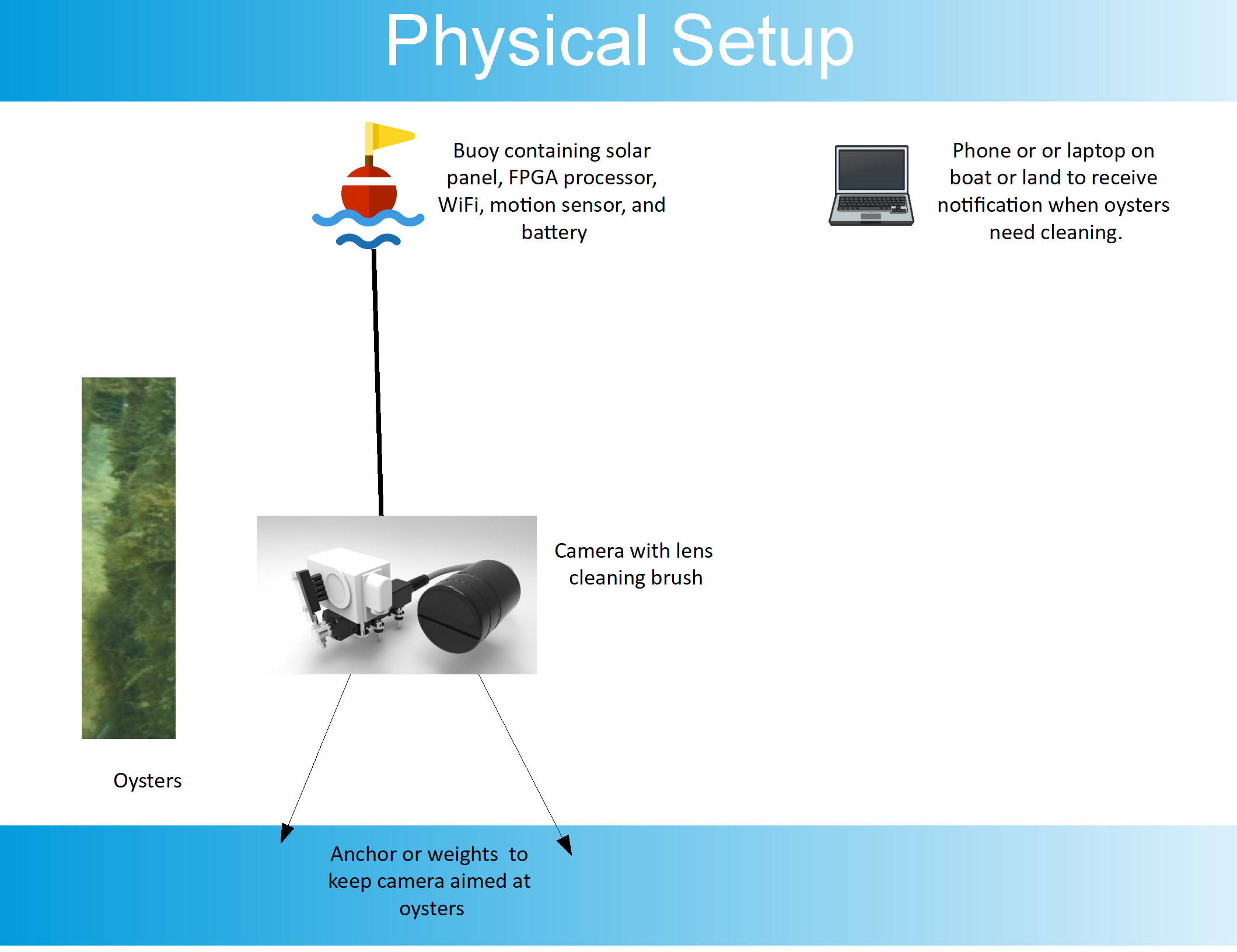

The physical setup is made up of three parts: the buoy compute system, the camera system, and a phone application for viewing the images from a boat or the shore.

The buoy system includes the buoy float, which marks the location of the system and holds the electronics. The electronics are enclosed in a seaworthy, watertight container with a clear cover. For initial prototyping, this would be an off-the-shelf balloon buoy with an attached clear waterproof case, both readily available. The electronics in the case would include the solar-powered battery (a readily available rugged outdoor solar phone charger would work), the FPGA board (with WiFi and attached sensors), and USB cables to connect the two, along with a longer cable to connect to the camera.

The camera system would include a camera in a waterproof enclosure with a wiper brush to keep the camera view free of biofouling. At least two anchors or large weights would hold the camera at the right depth and angle to view the oysters. An exact angle or view is not required, but the oysters must be within the camera's field of view.

3. Expected sustainability results, projected resource savings

The proposed system is very simple to deploy, but in making it simple we do not want to make it less functional. The purpose of the project is to reduce or eliminate the need for divers to check on the oysters to determine whether cleaning is needed. Divers would only be called in when cleaning is really needed.

The project addresses the problem in two ways:

1) Real-time analysis of the state of the oyster beds, using computer vision on underwater photos taken at least hourly. The photos are analyzed on site by FPGA algorithms near the camera, which produce a notification when cleaning is needed.

2) Predictive analysis, based on previous data, that determines when a cleaning will most likely be needed. Data uploaded periodically, either over the network or via the farmers' phone apps near the buoy, is incorporated into models in the cloud. This data includes not only the photos and the photo analysis results but also temperature, humidity, and swell/motion sensor data.
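The simplest possible version of the predictive step is a linear trend: fit the history of per-photo fouling scores against time and extrapolate to the day the score is expected to cross the cleaning threshold. This sketch assumes each photo has already been reduced to a numeric score between 0 and 1 and that samples are ordered by time; the threshold value and the `days_until_cleaning` name are hypothetical. It uses ordinary least squares on the scores alone, whereas the proposed cloud models would also draw on temperature, humidity, and swell data.

```python
from typing import Optional, Sequence


def days_until_cleaning(days: Sequence[float], scores: Sequence[float],
                        threshold: float = 0.3) -> Optional[float]:
    """Least-squares linear trend of fouling score vs. time, extrapolated to
    the day the score is expected to reach `threshold`.

    `days` must be in increasing order. Returns 0.0 if the latest score is
    already at or above the threshold, and None if there is too little data
    or no upward trend to extrapolate.
    """
    n = len(days)
    if n != len(scores):
        raise ValueError("days and scores must have the same length")
    if n < 2:
        return None
    if scores[-1] >= threshold:
        return 0.0
    mean_x = sum(days) / n
    mean_y = sum(scores) / n
    sxx = sum((x - mean_x) ** 2 for x in days)
    if sxx == 0:
        return None
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(days, scores))
    slope = sxy / sxx
    if slope <= 0:
        return None  # fouling is flat or falling; no crossing predicted
    intercept = mean_y - slope * mean_x
    crossing_day = (threshold - intercept) / slope
    return max(0.0, crossing_day - days[-1])


if __name__ == "__main__":
    # Score rising 0.05 per day from 0.0; crosses 0.3 on day 6.
    print(days_until_cleaning([0, 1, 2, 3], [0.0, 0.05, 0.10, 0.15]))
```

A straight line is only a baseline; biofouling growth is likely to depend on water temperature and season, which is exactly why the proposal feeds the extra sensor data into the cloud models.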

Additional feedback from the farmer, via a simple "Needs cleaning" button in the phone app used while viewing current and past photos, will help improve the models over time, to the point where predictive analysis allows farmers to visit the buoys to collect data only at very infrequent intervals.
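One way the "Needs cleaning" button could feed back into the on-buoy decision is by recalibrating the score threshold against the farmer's answers. A minimal sketch, assuming each photo is reduced to a numeric fouling score and each farmer response is stored as a `(score, needs_cleaning)` pair; `calibrate_threshold` and the default value are hypothetical. It picks the threshold with the fewest disagreements, which is a simple empirical-risk choice rather than a full model retraining.

```python
from typing import Sequence, Tuple


def calibrate_threshold(feedback: Sequence[Tuple[float, bool]],
                        default: float = 0.3) -> float:
    """Choose the fouling-score threshold that best agrees with the
    farmer's "Needs cleaning" answers.

    Each item is (fouling_score, farmer_said_needs_cleaning). The predicted
    label is `score >= threshold`; candidate thresholds are the observed
    scores plus one value just above the maximum (i.e., "never clean").
    """
    if not feedback:
        return default
    scores = sorted({s for s, _ in feedback})
    candidates = scores + [scores[-1] + 1e-6]

    def errors(t: float) -> int:
        # Count answers where the thresholded prediction disagrees.
        return sum((s >= t) != label for s, label in feedback)

    # Candidates are ascending and min() keeps the first of any tie, so the
    # lowest equally good threshold wins: this errs toward cleaning early.
    return min(candidates, key=errors)


if __name__ == "__main__":
    answers = [(0.10, False), (0.20, False), (0.35, True), (0.50, True)]
    print(calibrate_threshold(answers))  # prints 0.35
```

Breaking ties toward the lower threshold reflects the cost asymmetry in the proposal: an unnecessary diver visit is cheaper than letting a bed stay fouled.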

The FPGA board was chosen because it makes it easy to take advantage of open-source AI modeling and computer vision processing. As a fully functional compute system, it offers much of the processing capability of a desktop computer in an embedded format, allowing low power consumption and long-term operation while making it easy to interface the sensors and camera. The additional daughter boards will be used to bring more sensor data into the AI prediction models.

The initial design requires only parts that are readily available at a reasonable cost. This keeps the cost down and allows quick deployment for testing. The most expensive part of the system will be the wiper system that keeps the camera lens free of biofouling. As the project matures, the focus will shift to replacing components with parts that can be used for longer periods without replacement, since the open sea is a very harsh environment for equipment and electronics.

We believe the proposed system will improve the sustainability of small-scale oyster farming and reduce resource needs. By combining real-time notification when cleaning is needed with predictions of when future cleanings will be needed, the system is designed to give the farmer the best results.

4. Design Introduction

5. Functional description and implementation

6. Performance metrics, performance to expectation

7. Sustainability results, resource savings achieved

8. Conclusion

