The aim of this project is to reduce fuel consumption in waste collection by using smart garbage containers. Each smart container sends its fill level to the cloud, and the routes of the garbage collection vehicles are rescheduled and optimized accordingly. Vehicles then skip areas where the containers are not yet full, which prevents unnecessary fuel consumption. Each container also uses its sensors to detect abnormally high temperature and gas emissions and sends a warning, so that dangerous garbage-container explosions can be anticipated and prevented. The fill level is detected by a distance sensor inside the container, which measures the distance between the lid and the waste. When the measured distance falls below a set threshold, the container reports to the cloud that it is full. A Volatile Organic Compound (VOC) gas detector will be used to measure methane emissions, and a thermocouple will be used to measure temperature.

Project Proposal

1. High-level project introduction and performance expectation

Turkey: Smart Garbage Containers to Reduce Fuel Consumption Caused by The Frequency of Solid Waste Collection

Team Members: Cansu YILMAZ, Çağla Su TAŞDEMİR

The aim of this project is to reduce fuel consumption in waste collection by using smart garbage containers. Smart garbage containers make it possible to detect which containers are full and to plan an optimized route around them. This indirectly reduces human labor as well. With the optimized route, we aim to reduce fuel consumption, and therefore CO2 emissions, by shortening the distance traveled by garbage collection vehicles. A study conducted in Turkey in 2017 shows that one garbage collection vehicle consumes an average of 144 L of fuel per day and that 796,468.33 TL is spent on fuel in a year. [1] In Turkey, waste collection is carried out or outsourced by the district municipalities, so the target users of this project are municipalities. Collection and transportation account for 80% of the cost of waste management. With this system, municipalities will both contribute to a sustainable future and reduce the fuel costs of their waste collection vehicles.
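To illustrate how visiting only full containers can shorten the path, the following is a minimal sketch rather than our final routing method. It assumes containers lie on a flat grid with coordinates in kilometers and uses a simple nearest-neighbor heuristic; the container names, positions, fill states, and function names are all hypothetical.

```python
import math


def route_length(points):
    """Total length of the path that visits the points in the given order."""
    return sum(math.dist(a, b) for a, b in zip(points, points[1:]))


def nearest_neighbor_route(depot, bins):
    """Greedy route: start at the depot, always drive to the closest
    unvisited bin, and return to the depot at the end."""
    route = [depot]
    remaining = list(bins)
    while remaining:
        nearest = min(remaining, key=lambda p: math.dist(route[-1], p))
        remaining.remove(nearest)
        route.append(nearest)
    route.append(depot)
    return route


# Hypothetical positions (km) and fill states reported by the containers.
depot = (0.0, 0.0)
containers = {
    "A": ((1.0, 0.0), True),
    "B": ((1.0, 3.0), False),
    "C": ((2.0, 0.0), True),
    "D": ((4.0, 4.0), False),
}

all_bins = [pos for pos, _ in containers.values()]
full_bins = [pos for pos, is_full in containers.values() if is_full]

fixed_route = nearest_neighbor_route(depot, all_bins)   # visits every bin
smart_route = nearest_neighbor_route(depot, full_bins)  # visits full bins only

print(f"fixed route: {route_length(fixed_route):.2f} km")
print(f"smart route: {route_length(smart_route):.2f} km")
```

The nearest-neighbor heuristic is chosen here only for brevity. It does not guarantee the shortest possible route, and a production system would likely use a dedicated vehicle routing solver.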

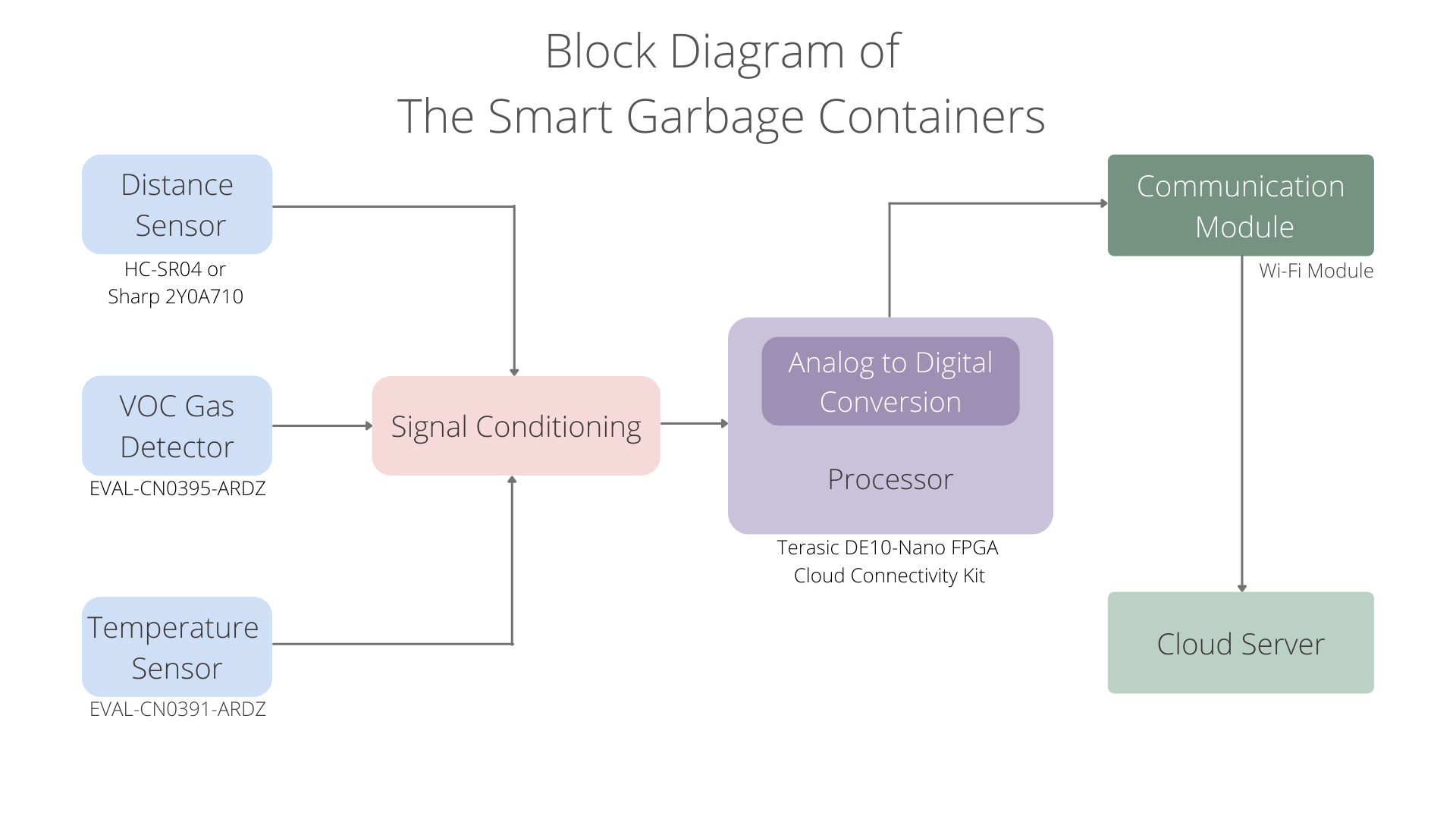

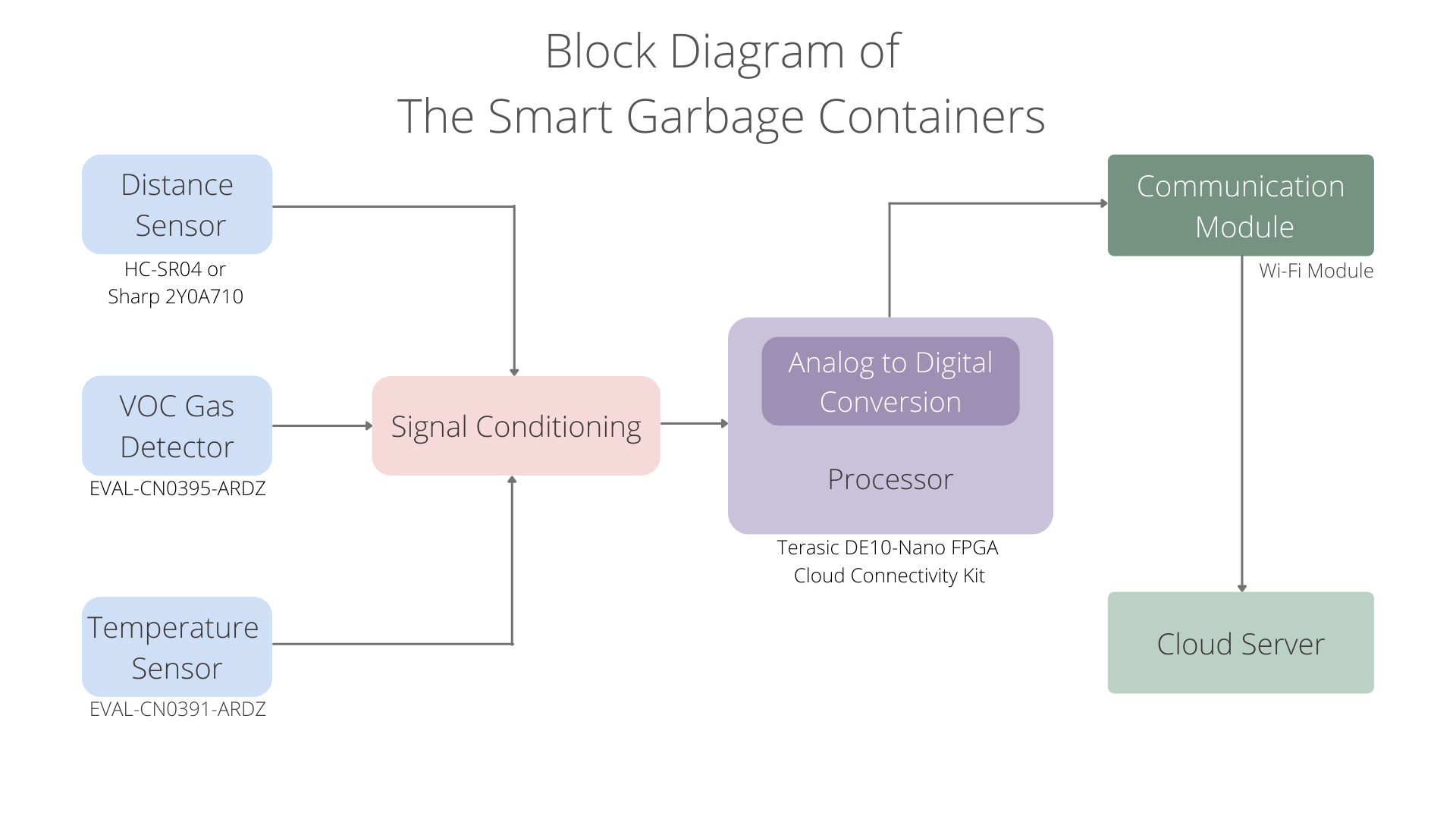

To report full garbage containers, a system that measures the fill level of each bin is needed. Proximity-, temperature-, weight-, pressure-, and vibration-based systems are among the available approaches to fill-level measurement. However, they are not as accurate as systems that use ultrasonic and IR sensors. In our smart garbage container system, the fill level is detected by a distance sensor inside the container, which measures the distance between the lid and the waste. When the measured distance falls below a set threshold, the container reports to the cloud that it is full. When a container is reported as full, the route of the garbage collection vehicles is rescheduled and optimized. In this way, vehicles do not enter areas where the containers are not yet full, which prevents unnecessary fuel consumption. Each smart container also uses its sensors to detect abnormally high temperature and gas emissions and sends a warning, so that dangerous garbage-container explosions can be anticipated and prevented. A Volatile Organic Compound (VOC) gas detector will be used to measure methane emissions, and a thermocouple will be used to measure temperature.
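The decision logic described above can be sketched as follows. This is an illustrative software model, not the FPGA implementation: the threshold values, the `Reading` type, and the function names are assumptions made for the example, and real thresholds would have to be calibrated for the chosen sensors and container size.

```python
from dataclasses import dataclass

# Illustrative thresholds only; none of these values come from the proposal.
FULL_DISTANCE_CM = 15.0    # lid-to-waste distance below which the bin is "full"
MAX_TEMPERATURE_C = 60.0   # temperature above which a warning is sent
MAX_GAS_PPM = 1000.0       # gas concentration above which a warning is sent


@dataclass
class Reading:
    distance_cm: float     # from the distance sensor under the lid
    temperature_c: float   # from the thermocouple
    gas_ppm: float         # from the VOC gas detector


def evaluate(reading):
    """Return the status flags a container would report to the cloud."""
    return {
        "full": reading.distance_cm < FULL_DISTANCE_CM,
        "temperature_alert": reading.temperature_c > MAX_TEMPERATURE_C,
        "gas_alert": reading.gas_ppm > MAX_GAS_PPM,
    }


def fill_percent(distance_cm, bin_depth_cm):
    """Convert the lid-to-waste distance into a fill level in percent,
    clamped to the range 0..100."""
    level = (bin_depth_cm - distance_cm) / bin_depth_cm * 100.0
    return max(0.0, min(100.0, level))


status = evaluate(Reading(distance_cm=10.0, temperature_c=25.0, gas_ppm=200.0))
print(status)
print(fill_percent(25.0, 100.0))
```

Note that the "full" condition is a distance falling below the threshold, because the sensor sits under the lid and the distance shrinks as waste accumulates.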

We want to use an Intel FPGA in this project because of its parallel processing performance. A general-purpose CPU executes its tasks largely sequentially, whereas an FPGA can run independent tasks truly in parallel in hardware, often with lower latency. This matters here because, while measuring the bin's fill level, we must continuously check for methane emissions at the same time. Programmability and cost efficiency are further advantages of FPGAs. Because an FPGA is reprogrammable, the circuit can be modified after it has been designed and deployed, which makes the system adaptable. Smart waste containers are open to further development and may require updates. Since the FPGA can be reprogrammed, such updates will not require a new processor and will not incur additional hardware cost.

References

[1] Yaman C. (2017). Costs Pertaining To The Collection, Transportation And Disposal Of Domestic Solid Wastes And General City Cleaning In The City Of İstanbul, pp.

[2] Data.tuik.gov.tr. 2021. TÜİK Kurumsal. [online] Available at: https://data.tuik.gov.tr/Bulten/Index?p=Belediye-Atik-Istatistikleri-2018-30666 [Accessed 30 September 2021].

[3] Ritchie, H. and Roser, M., 2021. CO2 and Greenhouse Gas Emissions. [online] Our World in Data. Available at: https://ourworldindata.org/co2/country/turkey [Accessed 30 September 2021].

2. Block Diagram

3. Expected sustainability results, projected resource savings

During 8 hours of garbage collection and transportation, a vehicle consumes an average of 28 liters of diesel. Burning 1 liter of diesel emits 2.7 kg of CO2, so one garbage collection round releases approximately 75.6 kg of CO2. Assuming that an 8-hour collection and transportation route is shortened to 7 hours, 9.45 kg of CO2 emissions are avoided per round. With one round per day, the garbage collection vehicle of a single region emits 3449.25 kg less CO2 per year. According to 2018 data, 1395 municipalities provide waste collection services in Turkey. [2] Even if each municipality has only one vehicle, this corresponds to approximately 4812 tons less CO2 per year in total. According to statistics, Turkey released 405 million tons of CO2 in 2019. [3] The savings therefore correspond to approximately 0.0012% of Turkey's CO2 emissions.
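The estimate above can be reproduced step by step with the short calculation below. The input figures are the ones quoted in the text; the variable names are ours, and the value of 365 rounds per year is an assumption inferred from the per-round and per-year figures.

```python
DIESEL_PER_ROUND_L = 28.0     # liters of diesel per 8-hour round
CO2_PER_LITER_KG = 2.7        # kg of CO2 emitted per liter of diesel
ROUND_HOURS = 8.0             # duration of one round today
HOURS_SAVED = 1.0             # assumed shortening of the round
ROUNDS_PER_YEAR = 365         # assumption: one round per day, every day
MUNICIPALITIES = 1395         # municipalities with waste collection (2018) [2]
TURKEY_CO2_2019_T = 405e6     # tons of CO2 released by Turkey in 2019 [3]

co2_per_round_kg = DIESEL_PER_ROUND_L * CO2_PER_LITER_KG
saved_per_round_kg = co2_per_round_kg * HOURS_SAVED / ROUND_HOURS
saved_per_vehicle_year_kg = saved_per_round_kg * ROUNDS_PER_YEAR
saved_national_t = saved_per_vehicle_year_kg * MUNICIPALITIES / 1000.0
share_percent = saved_national_t / TURKEY_CO2_2019_T * 100.0

print(f"CO2 per round:            {co2_per_round_kg:.2f} kg")
print(f"Saved per round:          {saved_per_round_kg:.2f} kg")
print(f"Saved per vehicle, year:  {saved_per_vehicle_year_kg:.2f} kg")
print(f"Saved nationally, year:   {saved_national_t:.0f} t")
print(f"Share of 2019 emissions:  {share_percent:.4f} %")
```

The calculation scales emissions linearly with route duration and counts only one vehicle per municipality, so it should be read as a conservative, order-of-magnitude estimate.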

4. Design Introduction

5. Functional description and implementation

6. Performance metrics, performance to expectation

7. Sustainability results, resource savings achieved

8. Conclusion
