AP034 » Facial Expression Recognition of Unconstrained faces using Deep Learning

Emotion can be recognized through different modalities, such as speech, facial expression and body gesture. Emotion recognition through facial expression has been an active research area over the last few decades, because facial expressions play an integral part in conveying our thoughts. Facial expression recognition (FER) systems are useful in human-computer interaction, human behaviour analysis, assisting autistic children and similar applications. Because an FER system cannot always capture the face in the same orientation, size and so on, an unconstrained facial expression recognition system is developed on FPGA. The objective of the project is to design a low-complexity FER system that uses binary auto-encoders (BAE) and a binarized neural network (BNN) as the classifier, supporting real-time performance without compromising accuracy.

Introduction

The FER system consists of four major sub modules.

Proposal of design:

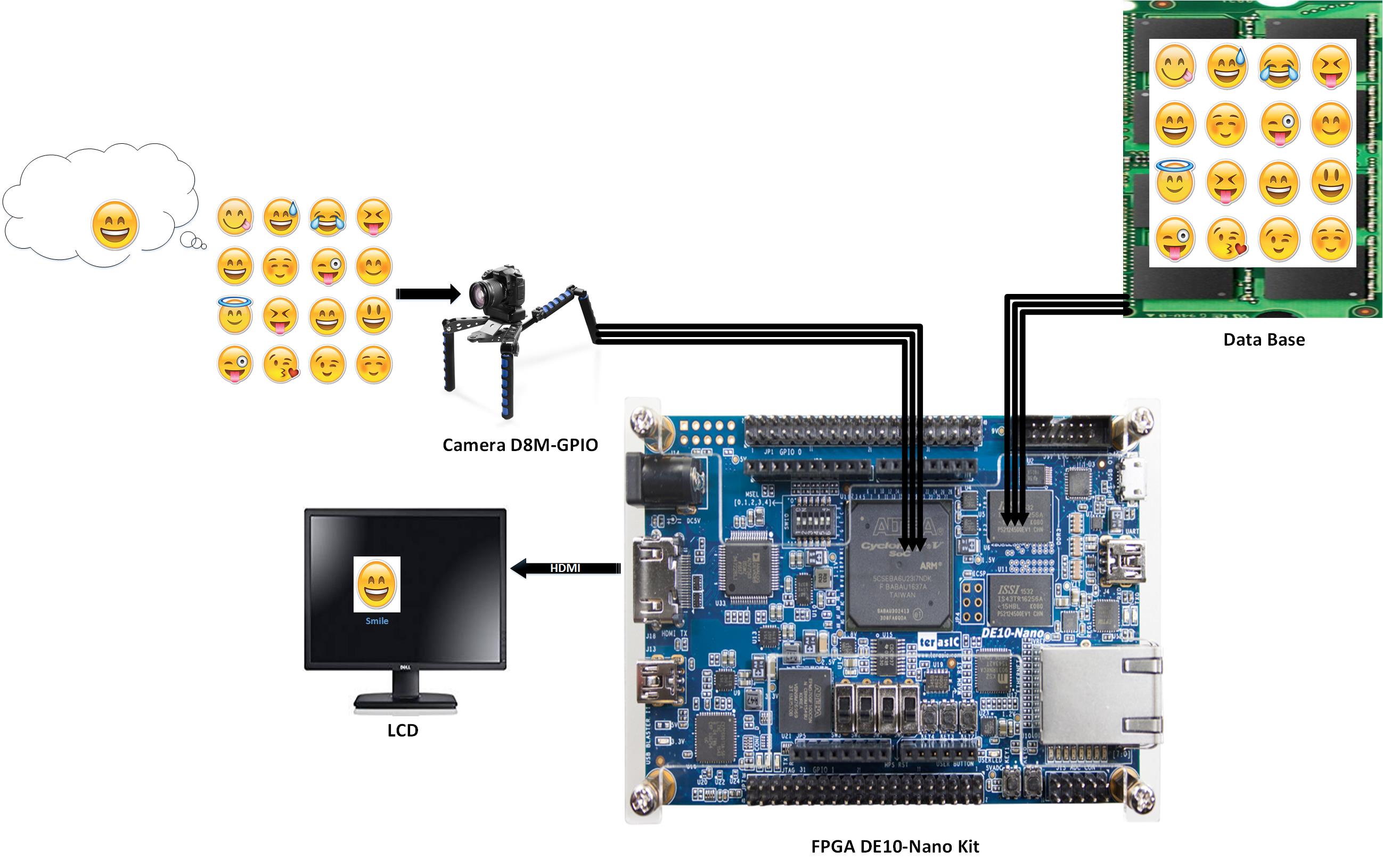

The image/video captured by the camera is stored in memory. The image is normalised so that only the face region remains. Features are then extracted using multi-scale local binary patterns, and the expression is classified using binary auto-encoders and a binarized neural network that has been trained on an existing database. Finally, the classification result is displayed on an LCD.

Image/Video capture

The Image/Video capture sub module uses the D8M-GPIO camera module to capture the input video. The video frames are captured using appropriate controllers and buffered in SDRAM for further processing.

Face Detection subsystem

The Face Detection subsystem takes the input image in real time from the Image/Video capture module. The region of interest (the face) is detected using the standard Viola-Jones Haar cascade classifier algorithm. The project uses a publicly available hardware implementation of the algorithm to detect the face.
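The Viola-Jones detector is fast largely because Haar-like features are evaluated on an integral image, so any rectangle sum costs four lookups. The following is a minimal software sketch of that idea only, not the hardware implementation the project relies on; the function names and the choice of a horizontal two-rectangle feature are assumptions made for illustration.

```python
import numpy as np

def integral_image(gray):
    """Summed-area table with a zero row and column prepended."""
    ii = np.zeros((gray.shape[0] + 1, gray.shape[1] + 1), dtype=np.int64)
    ii[1:, 1:] = gray.astype(np.int64).cumsum(axis=0).cumsum(axis=1)
    return ii

def rect_sum(ii, top, left, height, width):
    """Sum of the pixels inside a rectangle, using four table lookups."""
    bottom, right = top + height, left + width
    return ii[bottom, right] - ii[top, right] - ii[bottom, left] + ii[top, left]

def two_rect_haar_feature(ii, top, left, height, width):
    """Hypothetical horizontal two-rectangle feature: left half minus right half."""
    half = width // 2
    left_sum = rect_sum(ii, top, left, height, half)
    right_sum = rect_sum(ii, top, left + half, height, half)
    return left_sum - right_sum
```

A full detector would evaluate many such features inside a sliding window and pass them through a cascade of boosted classifiers; that part is omitted here.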

Feature Extraction subsystem

The main objective of the Feature Extraction subsystem is to extract features from the detected face image using an algorithm that is computationally efficient without compromising accuracy. The algorithm that suits this requirement is the Multi-scale Dense Local Binary Pattern (MDLBP) with an appropriate number of scales. The MDLBP algorithm extracts patterns at different resolutions from the input image.
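To illustrate what extracting patterns at different resolutions can look like, here is a simplified sketch that computes basic 8-neighbour local binary patterns at several radii and concatenates the normalised histograms. It is not the exact MDLBP formulation used in the project: the radii `(1, 2, 3)`, the square (non-interpolated) neighbourhood, the whole-image histogram and the function names are all assumptions.

```python
import numpy as np

# Eight neighbour offsets (dy, dx), clockwise starting from the top-left.
OFFSETS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]

def lbp_codes(gray, radius):
    """8-bit LBP code for every interior pixel, neighbours taken at `radius`."""
    g = gray.astype(np.int32)
    h, w = g.shape
    r = radius
    center = g[r:h - r, r:w - r]
    codes = np.zeros(center.shape, dtype=np.uint8)
    for bit, (dy, dx) in enumerate(OFFSETS):
        # Shifted view of the image aligned with the centre pixels.
        neighbour = g[r + dy * r:h - r + dy * r, r + dx * r:w - r + dx * r]
        codes |= (neighbour >= center).astype(np.uint8) * np.uint8(1 << bit)
    return codes

def multiscale_lbp_histogram(gray, radii=(1, 2, 3)):
    """Concatenate one normalised 256-bin LBP histogram per scale."""
    feats = []
    for r in radii:
        hist = np.bincount(lbp_codes(gray, r).ravel(), minlength=256).astype(np.float64)
        feats.append(hist / hist.sum())
    return np.concatenate(feats)
```

Each code needs only comparisons and bit operations, which is what makes this family of descriptors attractive for an FPGA. A dense variant would typically compute histograms per image block rather than once for the whole face.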

Classifier Subsystem

The extracted features are used to train a deep neural network (DNN) classifier with a reasonable number of images for every emotion. The project uses six emotions: happy, sad, surprise, angry, fear and neutral. The classifier sub module is a binarized neural network with binary weights and activations, in contrast to a conventional DNN, which requires a lot of memory and many multiply-accumulate (MAC) units.
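As a rough sketch of inference in such a classifier, the snippet below pushes a feature vector through one hidden layer with weights and activations restricted to -1 and +1, then picks the emotion with the highest integer score. The layer sizes, the absence of thresholds or batch normalisation, and the names `binarize` and `bnn_predict` are assumptions; the project's actual network is not specified at this level of detail.

```python
import numpy as np

EMOTIONS = ["happy", "sad", "surprise", "angry", "fear", "neutral"]

def binarize(x):
    """Map real values to {-1, +1}; zero is mapped to +1."""
    return np.where(np.asarray(x) >= 0, 1, -1).astype(np.int8)

def bnn_predict(features, hidden_w, output_w):
    """Forward pass with +/-1 weights and a sign activation.

    hidden_w: (n_hidden, n_features) matrix of +/-1 values.
    output_w: (6, n_hidden) matrix of +/-1 values, one row per emotion.
    """
    x = binarize(features)
    hidden = binarize(hidden_w.astype(np.int32) @ x.astype(np.int32))
    scores = output_w.astype(np.int32) @ hidden.astype(np.int32)
    return EMOTIONS[int(np.argmax(scores))], scores
```

Because every operand is -1 or +1, each weight and activation needs a single bit of storage, which is where the memory saving over a conventional DNN comes from.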

The extracted features are used to train a deep binarized neural network, that is, one with many layers rather than a shallow few. The first layer learns first-order features, the second layer learns higher-order features, and so on. The final layer is trained with supervised learning, whereas the other layers are trained with unsupervised learning. The transition from unsupervised to supervised learning is carried out using binary auto-encoders.

1. Image processing algorithms involving parallel processing run about 20 times faster on an FPGA than on a CPU.

2. Since the proposed system uses binarized neural networks, the output can be calculated without several multiplier-accumulators. It can be realized with XNOR (or XOR) operations followed by a bit count.

3. Efficient use of pipelining and parallel processing will result in an FER system that meets the real-time requirement.
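The second point can be made concrete with a small sketch. If -1 is encoded as bit 0 and +1 as bit 1, the dot product of two +/-1 vectors of length n equals 2 * popcount(XNOR(a, b)) - n, so no multipliers are needed. The packing convention and the helper names `pack_bits` and `xnor_popcount_dot` are assumptions chosen for illustration.

```python
def pack_bits(values):
    """Pack a sequence of +/-1 values into an int; bit i is set when values[i] == +1."""
    word = 0
    for i, v in enumerate(values):
        if v == 1:
            word |= 1 << i
    return word

def xnor_popcount_dot(a_word, b_word, n_bits):
    """Dot product of two packed +/-1 vectors using XNOR and a bit count."""
    mask = (1 << n_bits) - 1
    agree = ~(a_word ^ b_word) & mask      # XNOR: 1 where the two signs match
    matches = bin(agree).count("1")        # popcount
    return 2 * matches - n_bits            # matches minus mismatches

a = [1, -1, 1, 1, -1, -1, 1, -1]
b = [1, 1, -1, 1, -1, 1, 1, -1]
reference = sum(x * y for x, y in zip(a, b))
result = xnor_popcount_dot(pack_bits(a), pack_bits(b), len(a))
```

Here `result` and `reference` are both 2. On an FPGA the XNOR maps to simple logic and the bit count to an adder tree, so many such dot products can run in parallel.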