AP068 » VR|AR Headset with Kalman Filter Position Tracking and Stereo Vision Depth Sensing

Virtual/augmented reality (VR/AR) is a fascinating way to travel using nothing more than the power of technology. With a headset and motion tracking, VR/AR lets you look around a virtual space as if you were actually there. It has also been a promising technology for decades that never truly caught on.

Position tracking and depth sensing, which measure the user's head motion and the surrounding conditions, are very important for achieving immersion and presence in virtual reality. In VR/AR, performance is extremely important because every millisecond counts.

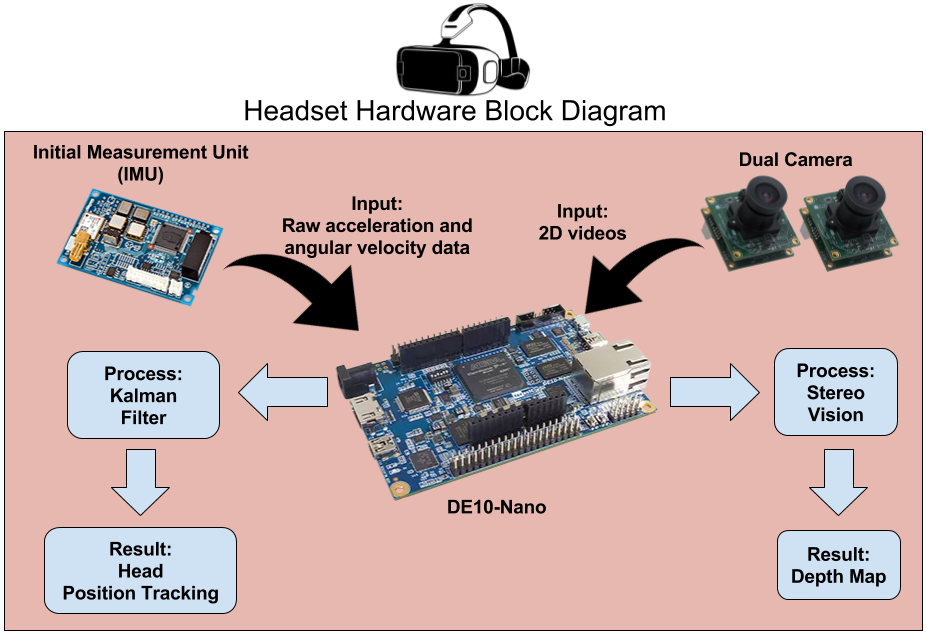

In our project, we propose an FPGA-based Kalman filter for analyzing IMU data for position tracking, together with a stereo camera for depth sensing. Raw IMU sensor data contains noise and interference, which makes it inaccurate for position tracking, so it requires filtering. The Kalman filter is an algorithm that requires complex matrix calculations; a fully hardware, FPGA-based Kalman filter can provide the high-speed arithmetic and pipelining these calculations need. The stereo vision depth processing pipeline will run on the FPGA and output a dense depth map. Object detection and tracking will then run on the integrated ARM processor, based on the dense depth map generated by the FPGA. Depth sensing on an FPGA consumes less power while delivering higher performance than a digital signal processor.

The low power consumption and small package size of the FPGA allow the realization of a battery-powered, cordless headset that maximizes user experience.

Virtual/Augmented Reality (VR/AR)

VR/AR is stepping towards mainstream adoption and will change the way we interface with digital devices forever.

Virtual reality is experienced through a head-mounted display (HMD), which displays two slightly different videos, one for each eye, to mimic the real input of human eyes. It requires low-latency head tracking to decide on and render the right view for the user.

Augmented reality is another form of VR that combines the virtual and the real by overlaying graphics onto a view of the real world. In this case, depth sensing is essential for the computer to make sense of the surroundings and place the graphics at the right position in the live camera feed.

VR/AR is not only about gaming. It also helps in healthcare, education, industry and more. It is the new chapter of human-computer interaction.

Position Tracking

Position tracking plays a vital role in producing an immersive virtual environment in VR/AR. Raw position tracking data from the IMU on the headset is fed into the FPGA and processed with the Kalman filter algorithm. The Kalman filter uses a series of collected measurements to estimate a more accurate output by performing complex matrix computations. Because it is well known to be computationally intensive, it may not meet the strict real-time requirements of VR/AR. The FPGA therefore comes into play to speed up the computation through parallelism, minimizing latency to make the experience as immersive as possible.

Eye Tracking

Eye tracking enables natural interaction with and control of the device using only the eyes. An eye tracker measures eye position and eye movement to sense what the user is looking at. A device with eye tracking allows a more natural way of performing actions than a mouse or touchpad. The eye tracker consists of IR camera hardware combined with image processing algorithms that interpret the captured image stream and calculate the position and movement of the eyes. An FPGA can help to reduce the latency and power consumption of the image processing computation, which can take advantage of the parallel nature of the FPGA.

Stereo-Vision Depth Sensing

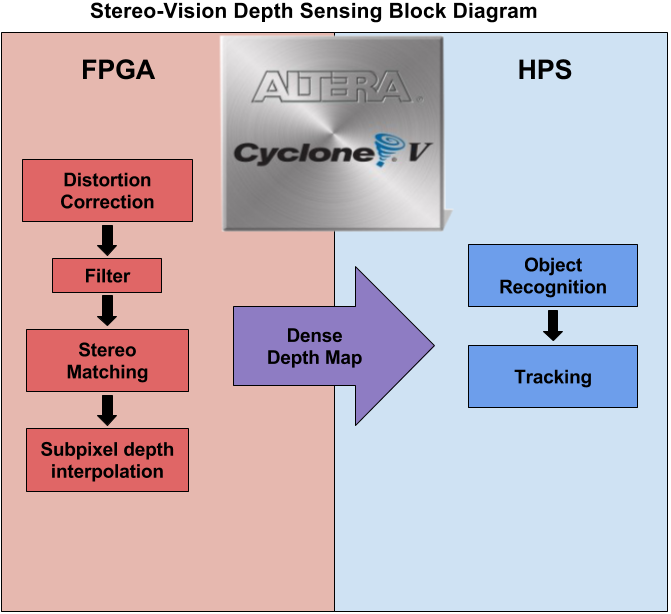

Stereo vision turns two 2D images into a 3D depth map, which substantially improves the accuracy of estimating the surrounding conditions. This is especially important for augmented reality, where virtual images are superimposed on the real world. Stereo vision depth sensing requires a pipeline of processes: noise filtering, distortion correction, stereo matching, outlier detection and subpixel depth interpolation. These processes can be accelerated by implementing parallel processing in the FPGA, allowing real-time generation of a dense depth map at a high frame rate. The depth map will then be processed by the processor for surroundings estimation, object recognition and tracking.

The stereo vision processing pipeline will run on the FPGA for preprocessing and on the processor for further processing. The camera video streams go directly into the FPGA for distortion correction and filtering. The corrected images are then fed into the stereo matching and subpixel depth interpolation circuit, which converts the two corrected 2D images into a single 3D depth map. Finally, the depth map is processed by the hard processor system for object recognition and tracking.

By combining the flexibility and high level of logic integration of gate arrays and the benefits of the convenience, ease-of-use and shorter design time, Intel FPGAs have become a mainstream technology for digital system design, especially through the use of FPGAs as reconfigurable computing machines.

The Kalman filter is a complex algorithm that requires heavy matrix computation. An implementation on the Intel FPGA hardware architecture has advantages in resource usage and throughput compared with a conventional implementation of this algorithm. Because position tracking in VR/AR tolerates very little latency, a fast processing speed is required, which an Intel FPGA is able to achieve.

The repetitive operations in stereo vision cause delay, making real-time processing too heavy for a CPU. An FPGA allows parallel processing, generating a real-time depth map at a high frame rate while consuming less power.

Purpose

Virtual reality and augmented reality are predicted to be important trends in the near future, with more and more new applications emerging and VR/AR becoming increasingly present in daily life. However, the industry is still developing towards its full potential, and its future remains uncertain, as there is still a long road ahead before VR/AR is fully ready and available.

The biggest challenge in introducing AR/VR to consumers is the complexity of building AR platform tools for AR developers and makers. The high barrier of entry on the hardware side, such as the bulky head-mounted display, is also a contributing factor.

The purpose of the project is to implement a lightweight, low-power sensor hub to be integrated with the head-mounted display, realizing a cordless, battery-powered head-mounted display that maximizes user experience.

Application scope

VR/AR will change the whole education landscape. It is able to provide a more immersive learning environment. Animated content in classroom lessons can catch students' attention in our dynamic day and age, as well as motivate them to study. Reality technology may make classes more engaging and information easier to comprehend. It can render objects that are hard to imagine as 3D models, making abstract and difficult content easier to grasp.

Moreover, VR/AR can be used in vocational training as well. The ability to experience training in 360 degrees is invaluable: students are able to view working machines from all angles without leaving the lecture room.

VR/AR is paving new digital paths in medicine. VR/AR medical simulations are able to deliver a tailored learning experience that can be standardized and can cater to different learning styles in ways that traditional teaching cannot match.

Targeted users

This project does not mainly target end users, as its purpose is to provide a sensor hub integrated into the AR headset and to serve as a platform from which AR developers can read raw data. The main targeted users of this project are the maker crowd, AR developers and students.

Why FPGA

By using an FPGA, a much higher computation speed can be achieved because of the highly parallel nature of both the FPGA and the digital signal processing workload. Real-time computation can be realized to improve user experience. More IO can be employed in parallel to reduce the IO bottleneck in the IO-bound task of reading raw data from various image sensors. In addition, the highly flexible interfacing of an FPGA allows it to connect to almost any type of module currently available on the market without much difficulty.

Stereo vision

Stereo vision extracts 3D depth information from digital images captured by two cameras. The two cameras are placed horizontally some distance apart from one another. Two different vantage points of a scene are obtained in a manner similar to human binocular vision. By comparing these two images, the depth information can be obtained in the form of a disparity map. The relative depth of objects can then be calculated when the distance between the two cameras and the focal length of the cameras are known. The steps of stereo vision are as follows:

Then, a winner-take-all (WTA) strategy is used to select, for each pixel, the disparity with the best aggregated matching cost. Local matching algorithms are known to be easily affected by uneven illumination and occlusion. Hence, occluded pixels near object borders are removed by a left-right consistency check.
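The WTA selection and left-right consistency check can be illustrated on a single pair of scanlines. This is a minimal software sketch only, not the project's FPGA circuit. It assumes a sum-of-absolute-differences (SAD) window cost, for which the best disparity is the one with the lowest cost; the function names, window size, tolerance and pixel values are all hypothetical.

```python
def sad(ref, other, x, shift, half):
    """SAD between a window around ref[x] and the window around other[x - shift]."""
    total = 0
    for k in range(-half, half + 1):
        i = min(max(x + k, 0), len(ref) - 1)            # clamp at the borders
        j = min(max(x + k - shift, 0), len(other) - 1)
        total += abs(ref[i] - other[j])
    return total


def wta(ref, other, max_disp, direction, half=1):
    """Winner-take-all: keep the disparity with the lowest SAD cost per pixel."""
    disparities = []
    for x in range(len(ref)):
        costs = [sad(ref, other, x, direction * d, half)
                 for d in range(max_disp + 1)]
        disparities.append(costs.index(min(costs)))
    return disparities


def left_right_check(left, right, max_disp, tol=1):
    """Invalidate (None) pixels whose left and right disparities disagree."""
    d_left = wta(left, right, max_disp, direction=+1)   # left x matches right x - d
    d_right = wta(right, left, max_disp, direction=-1)  # right x matches left x + d
    result = []
    for x, d in enumerate(d_left):
        xr = x - d                                      # matching pixel in the right image
        if xr < 0 or abs(d - d_right[xr]) > tol:
            result.append(None)                         # likely occluded or mismatched
        else:
            result.append(d)
    return result


# Hypothetical scanlines: the left view is the right view shifted by 2 pixels.
right = [10, 20, 35, 50, 80, 60, 40, 30, 15, 5, 25, 45]
left = [0, 0] + right[:-2]
disparity = left_right_check(left, right, max_disp=3)
```

In this toy input, interior pixels such as index 5 recover the shift of 2, while the first pixel, which has no counterpart in the right scanline, is rejected by the check.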

Disparity Calculation

From the disparity (D) computed, distance will be calculated from the equation

D = u2 - u1

d = distance

b = baseline (distance between optical centres of cameras)

D = disparity

c = optical center

f = focal length

g = epipolar line

Point M is projected as m1 in the left image plane; the corresponding point is projected as m2 in the right image plane.

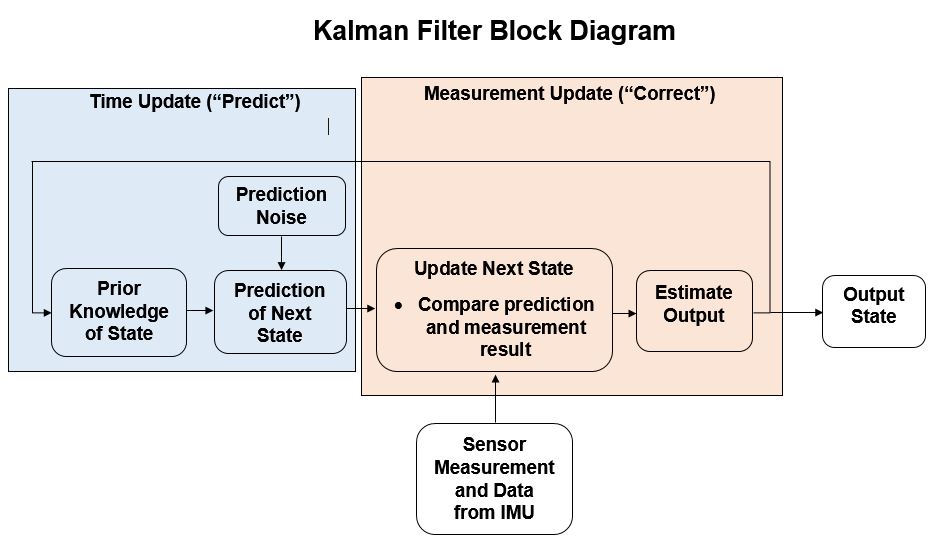
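The distance relation itself is not written out in the text above. As a hedged sketch, the snippet below assumes the standard relation for a rectified stereo pair, d = b · f / |D|, with u1 and u2 taken to be the horizontal pixel coordinates of m1 and m2 and f expressed in pixels. The function name and the numeric values are hypothetical and are not taken from the project.

```python
def depth_from_disparity(u1, u2, baseline_m, focal_px):
    """Distance d (metres) from the disparity D = u2 - u1 (pixels).

    Assumes a rectified stereo pair, so that d = b * f / |D|.
    """
    disparity = u2 - u1
    if disparity == 0:
        return float("inf")   # zero disparity: the point is infinitely far away
    return baseline_m * focal_px / abs(disparity)


# Hypothetical numbers: 7 cm baseline, 700 px focal length, 35 px disparity.
d = depth_from_disparity(u1=400, u2=365, baseline_m=0.07, focal_px=700)
print(f"distance: {d:.2f} m")
```

Because distance is inversely proportional to disparity, nearby objects produce large disparities and distant objects produce small ones, which is why subpixel interpolation matters most for far-away points.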

Kalman Filter

A Kalman filter is a statistical algorithm used to compute an accurate estimate of a signal contaminated by noise. It uses measurements observed over time to reduce noise, produce estimates of the true values, and estimate other parameters related to the signal model. The locator uses multiple input signals, each of which can be used on its own to determine the location of a device. Depending on the circumstances, some inputs are more contaminated with noise than others. The Kalman filter uses all the available information from all of the input signals to minimize the noise and determine the best estimate of the location of the device.

The Kalman filter operates by producing a statistically optimal estimate of the system state based upon the measurement(s). To do this, it needs to know the noise of the input to the filter, called the measurement noise, as well as the noise of the system itself, called the process noise. The noise is assumed to be Gaussian distributed with a mean of zero; luckily for us, most random noise has this characteristic. The figure below shows the complete Kalman filter equation diagram.

It is a powerful and widely applicable algorithm; however, it does have some requirements that can make it difficult to implement. It is a recursive filter, which means that each new set of inputs must wait for the previous outputs, and this can take a lot of computing time on a traditional sequential computing system. Also, it is not unusual for there to be a large block of inputs to the system. The filter is able to model the internal state of large and complex systems, so one expects a large number of variables to be involved in the processing. This process may be very slow on a traditional computing system.

By utilizing the FPGA environment, the inherent parallelism of the Kalman filter computation is exposed and exploited for maximum computing performance. The way to leverage this is to take advantage of the many computing resources available on an FPGA. FPGAs come with blocks of hardware resources such as DSP units, embedded memories and complex clocking structures, which can all be used to further the overall goal: to make the system as fast and seamless as possible. In addition, with an FPGA there is no need to send the hardware description to a foundry to have it produced in silicon. The FPGA can be configured by the end user as needed.
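The recursive predict/update cycle described in this section can be sketched in software for the simplest possible case. This is an illustrative scalar filter, not the project's hardware design: it assumes a one-dimensional state that is roughly constant between samples, and the names `q` (process noise variance), `r` (measurement noise variance) and the numeric values are hypothetical. The full filter replaces these scalars with matrices, which is where the heavy matrix arithmetic comes from.

```python
def kalman_step(x, p, z, q, r):
    """One predict/update cycle of a scalar Kalman filter.

    x, p : previous state estimate and its variance
    z    : new measurement
    q, r : process and measurement noise variances (zero-mean Gaussian assumed)
    """
    # Predict: the state is modelled as constant, so only the uncertainty grows.
    x_pred = x
    p_pred = p + q
    # Update: blend prediction and measurement according to the Kalman gain.
    k = p_pred / (p_pred + r)
    x_new = x_pred + k * (z - x_pred)
    p_new = (1.0 - k) * p_pred
    return x_new, p_new


def kalman_filter(measurements, x0=0.0, p0=1.0, q=1e-4, r=0.1):
    """Run the filter over a sequence; each step depends on the previous output."""
    x, p = x0, p0
    estimates = []
    for z in measurements:
        x, p = kalman_step(x, p, z, q, r)
        estimates.append(x)
    return estimates


# Hypothetical noisy readings that alternate around a true value of 1.0.
readings = [1.1, 0.9] * 50
estimates = kalman_filter(readings)
print(f"last reading: {readings[-1]:.2f}, last estimate: {estimates[-1]:.3f}")
```

The loop makes the recursion explicit: step n cannot start until step n-1 has produced its estimate, so any speedup has to come from parallelizing the arithmetic inside each step.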

Eye and Gaze tracking

Eye tracking measures the motion of an eye and the point of gaze. An eye tracker webcam is employed to measure eye position and eye movement. The eye tracker is not able to provide an absolute gaze direction, only approximate changes in gaze direction.

A multi-stage image processing approach is chosen because it needs no additional hardware, such as the IR LED or IR camera used in a typical infrared eye tracking system. A multi-stage approach is also taken for the centre localisation. A face detector is applied first; because the eyes are always in the upper region of the face, the rough eye region can then be extracted. The precise eye centre is estimated with a simple image-gradient-based eye centre algorithm by Fabian Timm, in which a simple objective function based on dot products is derived, and the centre of the pupil is found where the objective function yields a strong maximum. Then, before the eye tracker is used, a simple calibration is performed by looking in each direction to calculate the threshold for each gaze direction.
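The gradient-based centre search can be illustrated on a tiny synthetic image. The sketch below is a simplified reading of the idea, not the project's implementation: for every candidate centre it sums the squared dot products between the normalised displacement to each pixel and the normalised image gradient at that pixel, then keeps the candidate with the highest score. The function names and the 15x15 test image (a dark disc on a bright background) are hypothetical, and refinements such as weighting or thresholding are omitted.

```python
import math


def unit_gradients(img):
    """Central-difference gradients at interior pixels, normalised to unit length."""
    h, w = len(img), len(img[0])
    grads = []
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = (img[y][x + 1] - img[y][x - 1]) / 2.0
            gy = (img[y + 1][x] - img[y - 1][x]) / 2.0
            mag = math.hypot(gx, gy)
            if mag > 0:                       # keep only pixels on an edge
                grads.append((x, y, gx / mag, gy / mag))
    return grads


def eye_centre(img):
    """Return the (x, y) candidate that maximises the summed squared dot products."""
    grads = unit_gradients(img)
    best, best_score = None, -1.0
    for cy in range(len(img)):
        for cx in range(len(img[0])):
            score = 0.0
            for x, y, gx, gy in grads:
                dx, dy = x - cx, y - cy
                norm = math.hypot(dx, dy)
                if norm == 0:
                    continue                  # skip the candidate pixel itself
                dot = (dx * gx + dy * gy) / norm
                score += dot * dot
            if score > best_score:
                best, best_score = (cx, cy), score
    return best


# Hypothetical "pupil": a dark disc of radius 4 centred at (7, 7).
image = [[0 if (x - 7) ** 2 + (y - 7) ** 2 <= 16 else 255 for x in range(15)]
         for y in range(15)]
centre = eye_centre(image)
```

Every candidate centre is scored independently of the others, which is the kind of structure that maps naturally onto parallel hardware.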

The project uses 3 modules to compute the desired results concurrently. The computation time of each module is first measured in a software simulation as a baseline and then measured on the FPGA; the difference in execution time is the speedup provided by the FPGA.

| Process | Software Implementation | FPGA Implementation |
| --- | --- | --- |
| Eye Tracking | 1.6 s | ~ 33.3 ms (30 fps) |
| Stereo Vision | 2 s | ~ 65 ms (15 fps) |
| Kalman Filter Head Tracking | - | 1 µs |
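As a worked example, the speedup factors implied by the software and FPGA execution times above can be computed directly. The snippet assumes speedup is defined as software time divided by FPGA time; the dictionary and function names are hypothetical. Kalman filter head tracking is left out because no software baseline is reported for it.

```python
def speedup(software_s, fpga_s):
    """Speedup factor: how many times faster the FPGA run is than the software run."""
    return software_s / fpga_s


# Execution times in seconds, taken from the table.
timings = {
    "Eye Tracking": (1.6, 33.3e-3),
    "Stereo Vision": (2.0, 65e-3),
}

for name, (software_s, fpga_s) in timings.items():
    print(f"{name}: about {speedup(software_s, fpga_s):.0f}x faster on the FPGA")
```

With these figures the eye tracking module comes out at roughly 48 times faster and the stereo vision module at roughly 31 times faster.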

FPGA Hardware Design Block Diagram

Eyes Detection Algorithm Flow