Health

Musconnect

AS029 »

Neuromuscular diseases are among the most common conditions, yet among the hardest to diagnose. Most are diagnosed at a very late stage, when no cure can help the patient.

But what if this could change? The goal of Musconnect is to monitor almost 100 muscles in a patient simultaneously and in real time. The data is gathered over a period of time using microelectrodes attached to the patient. The electrode data is transmitted to the FPGA, processed by the MCU, and uploaded to and stored in Azure. Using data analysis, the device will be able to predict deterioration in muscle activity well before a doctor or the patient would notice it.

Moreover, because the device can monitor 100 major muscles across a patient’s body, the diagnosis will be able to pinpoint the exact location of muscle abnormalities. A doctor can then decide on the best treatment option rather than going through several expensive and inaccurate scans.

Since muscle activity is saved in the cloud, the patient or doctor will be able to monitor improvement under treatment by comparing muscle contractions before and after diagnosis.
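The before-and-after comparison could be sketched as follows. This is a minimal illustration, not the project's actual analysis: the RMS amplitude measure, the function names, the muscle IDs, and the 20% `max_drop` threshold are all assumptions chosen for the example.

```python
from math import sqrt
from statistics import mean


def rms(samples):
    """Root-mean-square amplitude of one window of electrode samples."""
    return sqrt(mean(s * s for s in samples))


def flag_deterioration(baseline, recent, max_drop=0.2):
    """Return the muscle IDs whose RMS amplitude fell by more than max_drop.

    baseline, recent: dicts mapping a muscle ID to a list of samples.
    max_drop: hypothetical relative threshold (0.2 means a 20% drop).
    """
    flagged = []
    for muscle, base_samples in baseline.items():
        base = rms(base_samples)
        if base == 0 or muscle not in recent:
            continue  # nothing to compare against
        drop = (base - rms(recent[muscle])) / base
        if drop > max_drop:
            flagged.append(muscle)
    return sorted(flagged)
```

Because the comparison is made per muscle, the result also indicates where on the body the change occurred.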

This would be a major step for the medical field, one that could help millions of people around the world receive an accurate diagnosis.

Other: Monitoring fire in rural areas

Fangorn

AS031 »

The Fangorn project aims to develop a drone surveillance system to detect and monitor outbreaks of forest fires. A camera with an infrared sensor and additional sensors (temperature, smoke, pressure) will capture data, which will be used with computer vision techniques and the parallel processing power of the FPGA to classify whether or not there is a fire outbreak in the area.

Health

FPGA IMPLEMENTATION OF DEEP LEARNING MODEL FOR RADIOGRAPHIC EXAMINATION

AP057 »

Healthcare today is patient-centric and data-driven, thanks to advances in IoT and artificial intelligence. Early detection and diagnosis of contagious diseases and infections is needed, and it is generally performed through radiographic analysis. Deep learning in radiologic image processing reduces false-positive and false-negative errors in the detection and diagnosis of disease and offers a unique opportunity to provide fast, cheap, and safe diagnostic services to patients.

Deep learning has the potential to augment the use of chest radiography in clinical radiology, but challenges include poor generalizability, spectrum bias, and difficulty comparing across studies. Recently, several clinical applications of CNNs have been proposed and studied in radiology for classification, detection, and segmentation tasks. The deterministic deep learning model classifies a radiograph into three classes: Normal, COVID-19, and Viral Pneumonia. The probabilistic model classifies a radiograph as Normal, Cardiomegaly, Mass, or other abnormalities, using class activation maps (CAM). The main objective is to develop a deep learning-based reconfigurable architecture that can classify normal and abnormal results from chest radiographs with major thoracic diseases, including pulmonary malignant neoplasm, active tuberculosis, pneumonia, pneumothorax, and COVID-19, and to validate its performance on an Intel FPGA.

Smart City

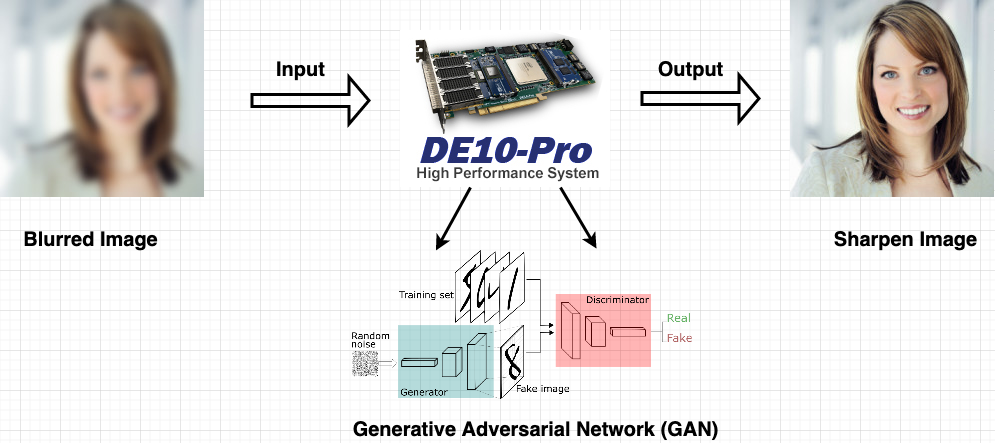

Fast Image Deblurring Reconstruction using Generative Adversarial Networks

AS032 »

Deblurring is the process of removing blur from an image. With the advent of machine learning, the research community has put tremendous effort into new deblurring techniques. However, state-of-the-art deblurring techniques still take hours to produce a properly deblurred image. The objective of this project is therefore to produce a properly deblurred image instantly. To accomplish that, we will use Generative Adversarial Networks (GANs). We have come up with a solution to speed up GAN training. We will deploy our solution on the cloud connectivity kit and also make use of Microsoft Azure to accelerate deblurred image reconstruction.

Our project will have applications in Smart City, Autonomous Vehicles, Industrial, and other domains, as it involves recovering clearly visible images from blurred ones.

Food Related

Soil quality smart monitoring

AS033 »

Using Analog Devices' EVAL-CN0398-ARDZ board, Intel's cloud connectivity kit, and Azure's IoT services, we will build a smart station to measure and analyze various parameters related to soil quality for smart agriculture, providing hardware and software solutions for a complete system.

Other: Smart glass for visually impaired

Smart Text Detection Glass

AP067 »

The main aim of our "Smart Text Detection Glass" is to help visually impaired people read text. It offers them a way to complete their education despite the difficulties they face. Our project is divided into four sections: text detection, text recognition, text-to-speech conversion, and text translation. The task of our glass is to scan any image containing text and convert the text into audio, which the user listens to through headphones.

The technologies we are going to use in this project are OCR and Google Text-to-Speech. Text detection from images is done using OpenCV and Optical Character Recognition (OCR) with the Tesseract OCR engine. OpenCV is a real-time computer vision library used for digital image and video processing. OCR is the technology that converts handwritten, typed, or printed text into machine-encoded text. The OCR process involves five stages: preprocessing, image segmentation, feature extraction, image classification, and post-processing. We are also using the Efficient and Accurate Scene Text Detector (EAST), which uses a convolutional neural network. EAST is a simple and powerful method that detects text in natural scenes with high accuracy and efficiency. To convert the text to audio, we use the gTTS text-to-speech library. The text is translated to Tamil using the Google translation services library. All the software is written in Python; we are using IDLE with Python 3.9.7.

We are integrating this software with hardware using a Raspberry Pi 3 Model B+. Our prototype plan includes glasses with a webcam and headphones. The Raspberry Pi is worn on the user's arm and is fitted with a push button. When the user presses the push button, a picture is captured by the webcam fitted in the glass frame. The image is then processed, the text characters present in the image are extracted, and the text is translated to Tamil. Finally, the translated text is converted into audio output, which is heard through the headphones.

In this work we plan to implement the EAST convolutional neural network algorithm in FPGA hardware, because it involves a large number of numerical calculations and is time-consuming when done sequentially.
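The button-press flow described above (capture, text extraction, translation, speech) can be sketched as a small pipeline. This is only an illustration: `read_aloud` and its four stage parameters are hypothetical names, and each stage is passed in as a plain callable so that the sketch does not assume any particular webcam, OCR, translation, or text-to-speech API. The default `"ta"` is the ISO 639-1 code for Tamil.

```python
from typing import Callable


def read_aloud(
    capture: Callable[[], bytes],
    extract_text: Callable[[bytes], str],
    translate: Callable[[str, str], str],
    speak: Callable[[str, str], None],
    target_lang: str = "ta",
) -> str:
    """Run one button press through capture, OCR, translation and speech.

    Returns the translated text, or "" when no text was found.
    """
    image = capture()
    text = extract_text(image).strip()
    if not text:
        return ""  # nothing to read; skip translation and audio
    translated = translate(text, target_lang)
    speak(translated, target_lang)
    return translated
```

Keeping the stages separate also means the text detection stage could later be swapped for an FPGA-accelerated one without touching the rest of the flow.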

Food Related

Reduction in food wastage

AP071 »

IoT sensors placed near areas where food is harvested and where dishes are prepared collect data such as expiry date and temperature. This data is sent to the cloud, where it is processed to decide whether to store the food or, if the expiry date is approaching, distribute it to people in need.
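A minimal sketch of the store-or-distribute decision is shown below. The function name, the two-day `min_days_left` threshold, and the separate "discard" outcome for food that has already expired are assumptions made for illustration; the proposal itself only names the store and distribute outcomes.

```python
from datetime import date


def decide(expiry: date, today: date, min_days_left: int = 2) -> str:
    """Return 'store', 'distribute' or 'discard' for one food item.

    min_days_left: hypothetical threshold for "expiry is approaching".
    """
    days_left = (expiry - today).days
    if days_left < 0:
        return "discard"      # assumption: expired food is not distributed
    if days_left <= min_days_left:
        return "distribute"   # expiry is close: send to people in need
    return "store"
```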

Health

Sign Language Interpreter

AP072 »

The system has two robotic hands with arms that work as a sign language interpreter. A sign language interpreter helps people communicate with those who were born deaf or have hearing loss. Over 5% of the world's population, or 430 million people, have disabling hearing loss, including 34 million children. It is estimated that by 2050 over 700 million people (one in every ten) will have disabling hearing loss, so it will be necessary to find ways to communicate with them. Our sign language interpreter will be able to perform up to 40-50 signs, including words and greetings (e.g. hello, goodbye), based on input given through a mobile application. This makes communication between a hearing person and a deaf person easier. For instance, a person who does not know sign language may want to communicate with a person who cannot hear. In that case, he or she can simply enter the message in the mobile application, and the robotic arms will convey it to the deaf person in sign language.

Smart City

Advanced Automatic Number Plate Recognition System

AP075 »

Recognizing vehicle number plates is a much-needed capability. Such systems are already in use in many countries, but we want to make some advancements. We propose a vehicle number plate recognition system that automatically recognizes number plates using image processing. We will define a zone; when a vehicle enters the parking area, the camera automatically detects its number plate. The camera will also detect the vehicle's type, make, and model. This will serve as a new benchmark for security systems as well as for automatic toll deduction.

Autonomous Vehicles

Adaptive Cruise Control

AP079 »

Our objective is to build an Adaptive Cruise Control (ACC) system that helps vehicles maintain a safe distance and stay within the speed limit. The system will control the car's speed automatically, assisting the driver and keeping the driver's involvement in driving the vehicle to a minimum.
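The two goals stated above, keeping a safe distance and staying within the speed limit, can be illustrated with a simple constant-time-gap rule. This is a sketch under stated assumptions rather than the project's controller: the function name, the 2-second `time_gap`, and the proportional `gain` are hypothetical values chosen for the example.

```python
def acc_target_speed(own_speed, gap, lead_speed, speed_limit,
                     time_gap=2.0, gain=0.5):
    """Return the speed (m/s) the vehicle should aim for.

    gap: distance to the vehicle ahead in metres, or None if the lane is clear.
    time_gap: desired headway in seconds (hypothetical default).
    gain: proportional gain on the gap error (hypothetical default).
    """
    if gap is None:
        return speed_limit  # no lead vehicle: cruise at the limit
    desired_gap = time_gap * own_speed
    # Match the lead vehicle, then speed up or slow down by the gap error.
    target = lead_speed + gain * (gap - desired_gap)
    # Never reverse and never exceed the speed limit.
    return max(0.0, min(target, speed_limit))
```

A real system would feed this target into a lower-level throttle and brake controller and would filter the distance measurements first.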

Other: Sustainable Agriculture

SGAIA - Smart Greenhouse Automation using IOT and AI

AP084 »

The proposed greenhouse system is fully automated and includes everything needed to run a greenhouse. The greenhouse’s main brain would be the Terasic DE10-Nano FPGA Cloud Connectivity Kit. It would be responsible for all activities: collecting sensor data, gathering images for analysis of plant growth, operating actuators, and connecting to the Azure IoT cloud to send the sensor data. There would be many rows of plants in the greenhouse, each row allotted to a specific crop type.

The sensor network connected to the main board would collect sensing information, including environmental parameters and plant phenotyping data. Various Analog Devices plug-in cards would be connected to collect temperature, humidity, pressure, light intensity, gas, soil moisture, and pH values. All these values would be recorded using a data logger, and the recorded data would be used for processing and post-data analysis. Overhead cameras would collect information on plant growth using pattern recognition. All deep learning analysis would take place at the edge, on the DE10-Nano board, resulting in low communication costs and improved response times. The analysis results would be transmitted to the Azure IoT cloud services to make them available to users through cloud dashboards and smartphone applications.

A robotic sliding arm mounted over the rows of plants could be used for activities such as sowing seeds, delivering nutrients like NPK (nitrogen, phosphorus, potassium) directly to the roots of the plants, inspecting plants for diseases, and removing weeds. The robot would be a gantry system with three degrees of freedom. The end effector of the gantry robot would carry different types of actuators for tasks such as watering and nutrient delivery.

The whole farm would be inside a structure made of glass or fiber. The roof of the greenhouse would be fitted with sun shades that can be operated according to the sunlight required. The structure would be fitted with ventilation fans to regulate air flow. The structure would help control factors such as excessive sunlight, pests, and other external factors that might affect plant growth.

Transportation

AN ECO-FRIENDLY POTHOLE DETECTION SYSTEM FOR PEER-TO-PEER VEHICULAR COMMUNICATION

EM025 »

Vehicle movement on most African roads can be frustrating and unpredictable. This is because these roads are made with substandard materials that deteriorate within a short period, causing potholes and broken surfaces. Many lives have been lost in road accidents because road users are unaware of the condition of the road ahead. To address the problems associated with bad roads, intelligent traffic management infrastructure such as laser scanners and vibration sensors seems to be a viable solution to the increasing pressure on road infrastructure. However, these applications are expensive and require institutional intervention to set up on highways. Navigation maps have been economical for drivers, but their focus has been on routing efficiency rather than safety. This project proposes a system that takes account of the current state of road infrastructure by detecting potholes on driven roads and communicating the road condition to a pothole database as well as to oncoming vehicles up to 2 miles away from the spot along the same road.

The proposed system is divided into two modules: a pothole detection module and a vehicular communication module. The pothole detection module uses a conventional camera to capture the road condition up to 100 meters ahead of the moving vehicle and processes these images using a pothole detection model that runs in real time. The model will be mapped to a resource-efficient architecture suitable for FPGA implementation and an embedded computing environment. The vehicular communication module uses a data communication model to exchange stacked pothole attributes with passing vehicles to communicate the condition of the road ahead. Pothole attributes such as size, location, estimated depth, and distance are stacked for the last 2 miles and communicated in sequence to oncoming vehicles. This attribute information is also sent to a remote cloud database that serves as an information repository.
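One way to picture the stacking of pothole attributes over the last 2 miles is a sliding window keyed on distance travelled. The sketch below is an assumption-laden illustration, not the project's data communication model: the `Pothole` and `PotholeStack` names, the use of an odometer reading, and the field layout are all hypothetical.

```python
from collections import deque
from dataclasses import dataclass

MILE_M = 1609.344  # metres per mile


@dataclass
class Pothole:
    odometer_m: float  # distance travelled when the pothole was detected
    lat: float
    lon: float
    size_m: float
    depth_m: float     # estimated depth


class PotholeStack:
    """Keeps potholes detected over the last `window_m` metres of travel."""

    def __init__(self, window_m: float = 2 * MILE_M):
        self.window_m = window_m
        self._items = deque()

    def add(self, pothole: Pothole) -> None:
        self._items.append(pothole)
        self.prune(pothole.odometer_m)

    def prune(self, odometer_m: float) -> None:
        # Oldest detections sit at the left; drop those beyond the window.
        while self._items and odometer_m - self._items[0].odometer_m > self.window_m:
            self._items.popleft()

    def messages(self, odometer_m: float) -> list:
        """Potholes in detection order, each paired with its distance behind the sender."""
        self.prune(odometer_m)
        return [(p, odometer_m - p.odometer_m) for p in self._items]
```

For a vehicle travelling in the opposite direction, the distance behind the sender is the distance it still has to cover before reaching each pothole.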