Transportation

Project Vanguard

AP065

We propose to develop an IoT-based device that alerts nearby emergency services and primary contacts when an accident happens. The device is built around an FPGA with peripherals including an accelerometer, a GPS module, and two cameras, so that officials are alerted promptly and losses are mitigated.

When a collision occurs, the accelerometer detects the sudden, drastic change in acceleration. As soon as a collision is detected, the recording is stored in memory so that the footage can be retrieved and used by the police for further investigation if necessary. Two memory devices are used to store the recorded video: once 60 minutes have elapsed, recording continues on the other memory device while the first one is cleared, so that recording is never delayed by the clearing process.
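
The collision trigger and the two-device rotation can be sketched as a small Python model (not FPGA logic). The 4 g threshold, the frame-count segment size, and the names `is_collision` and `PingPongRecorder` are hypothetical assumptions for illustration; only the idea of swapping devices after each 60-minute segment comes from the description above.

```python
import math

# Hypothetical trigger level: 4 g is an illustrative value, not a calibrated one.
COLLISION_THRESHOLD_G = 4.0


def is_collision(ax, ay, az, threshold_g=COLLISION_THRESHOLD_G):
    """Return True when the acceleration magnitude (in g) exceeds the threshold."""
    return math.sqrt(ax * ax + ay * ay + az * az) > threshold_g


class PingPongRecorder:
    """Records into one of two memory devices and swaps when a segment is full.

    In the proposal a segment is 60 minutes of video; here it is simply a frame count.
    """

    def __init__(self, frames_per_segment):
        self.devices = [[], []]      # stand-ins for the two memory devices
        self.active = 0              # index of the device currently recording
        self.frames_per_segment = frames_per_segment
        self.saved_clips = []        # footage preserved after a collision

    def record_frame(self, frame):
        self.devices[self.active].append(frame)
        if len(self.devices[self.active]) >= self.frames_per_segment:
            finished = self.active
            self.active = 1 - finished       # recording continues on the other device
            self.devices[finished].clear()   # in hardware this clear runs in parallel

    def on_collision(self):
        # Copy the current segment so it can be retrieved later by investigators.
        self.saved_clips.append(list(self.devices[self.active]))
```

In this literal reading the completed segment is cleared at the swap, so a clip saved just after a swap holds little pre-collision footage; a real design might keep the previous segment until the following swap.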

The FPGA and the sensors come in a single package, together with two external cameras. The user plugs the device into the battery-powered accessory socket available in every car, which supplies power to the FPGA device. The user then connects the cameras to the device and mounts them in suitable positions; a technician may be needed to take care of the wiring.

In addition, the data collected from the sensors and the video will be relayed to the cloud, where we plan to implement different algorithms to process the data further, for example to compute the average speed over the entire journey. The video will also be processed in the cloud to determine various aspects, including but not limited to the severity of the accident and possible recognition of number plates.
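
As an example of the cloud-side processing, the average speed over a trip can be computed from timestamped GPS fixes. This sketch assumes the fixes arrive as `(timestamp_s, lat_deg, lon_deg)` tuples and uses the haversine great-circle distance with a mean Earth radius of 6,371 km; neither the data format nor the distance formula is specified in the proposal.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius in metres


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres between two (lat, lon) points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def average_speed_kmh(fixes):
    """Average speed in km/h over time-sorted (timestamp_s, lat_deg, lon_deg) fixes."""
    if len(fixes) < 2:
        return 0.0
    # Sum the distance between each pair of consecutive fixes.
    distance_m = sum(
        haversine_m(a[1], a[2], b[1], b[2]) for a, b in zip(fixes, fixes[1:])
    )
    duration_s = fixes[-1][0] - fixes[0][0]
    return (distance_m / duration_s) * 3.6 if duration_s > 0 else 0.0
```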

Disclaimer: The above information describes the basic functioning of the device as a whole. The final product may include extra features and possible optimizations.

Health

Design and Implementation of FPGA Accelerator for Computed Tomography based 3D reconstruction

AP066

Computed tomography (CT) is a commonly used methodology that produces 3D images of patients. It allows doctors to non-invasively diagnose various medical problems such as tumors, internal bleeding, and complex fractures. However, the high radiation exposure from CT scans raises serious safety concerns. This has triggered the development of low-dose, compressive-sensing-based CT algorithms. Instead of traditional algorithms such as filtered back projection (FBP), iterative algorithms such as expectation maximization (EM) are used to obtain a quality image with considerably less radiation exposure.
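
The kind of EM iteration such systems accelerate can be sketched in plain Python. This is the standard maximum-likelihood EM (MLEM) multiplicative update, written with a small dense system matrix `A` for clarity; the proposed accelerator instead computes ray-voxel contributions on the fly in a ray-driven fashion, and the exact EM variant it uses is not stated, so treat this as an illustrative assumption.

```python
def mlem(A, y, iterations=50):
    """Maximum-likelihood EM (MLEM) reconstruction.

    A: system matrix as a list of rows (A[i][j] = contribution of voxel j to ray i)
    y: measured projection values, one per ray
    Update: x_j <- x_j / s_j * sum_i A[i][j] * y[i] / (A x)_i,  where s_j = sum_i A[i][j]
    """
    n_rays, n_vox = len(A), len(A[0])
    x = [1.0] * n_vox  # strictly positive initial estimate
    # Sensitivity s_j: total weight of voxel j over all rays
    sens = [sum(A[i][j] for i in range(n_rays)) for j in range(n_vox)]
    for _ in range(iterations):
        # Forward projection: estimated measurement along each ray
        proj = [sum(A[i][j] * x[j] for j in range(n_vox)) for i in range(n_rays)]
        # Ratio of measured to estimated projections (guard against division by zero)
        ratio = [y[i] / proj[i] if proj[i] > 0 else 0.0 for i in range(n_rays)]
        # Back-project the ratios and apply the multiplicative update
        x = [
            x[j] * sum(A[i][j] * ratio[i] for i in range(n_rays)) / sens[j]
            if sens[j] > 0 else 0.0
            for j in range(n_vox)
        ]
    return x
```

Because every update is a forward projection followed by a back projection, the ray-voxel loops dominate the run time, which is what the FPGA techniques listed below target.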

In this work, we propose a complete, working CT reconstruction system implemented on a server-class node with FPGA coprocessors. It incorporates several FPGA-friendly acceleration techniques. The contributions of the proposed work include:

• Ray-driven voxel-tile parallel approach: This approach exploits the computational simplicity of the ray-driven approach while taking advantage of both ray and voxel data reuse. Both race conditions and bank conflicts are completely eliminated. The degree of parallelism can also be increased easily by adjusting the tile shape.

• Variable throughput matching optimization: Strategies to increase performance for designs whose processing elements (PEs) have variable and disproportionate throughput rates. In particular, logic consumption is reduced by exploiting the low complexity of the frequently computed parts of the ray-driven approach, and logic usage is further reduced by using small-granularity PEs and module reuse.

• Offline memory analysis for irregular access patterns in the ray-driven approach: To tile the voxels efficiently despite irregular memory access, an offline memory analysis technique is proposed. It exploits the input-data independence property of the CT machine; a compact storage format is also presented.

• Customized PE architecture: We present a design that achieves high throughput and logic reuse for each PE.

• Design flow for rapid hardware-software design: A flow is presented to generate hardware and software for various CT parameters.

In this work, we adapt the parallelization scheme, the offline memory analysis technique, the variable throughput optimization, and the automated design flow. The resulting optimized design is therefore expected to be much faster than the earlier model on the same dataset.

Other: Smart glass for visually impaired

Smart Text Detection Glass

AP067

The main aim of our "Smart Text Detection Glass" is to help visually impaired people read text, so that they can pursue their education despite the difficulties they face. The project is divided into four sections: text detection, text recognition, text-to-speech conversion, and text translation. The glass scans any image containing text and converts the text into audio that the user listens to through headphones. The main technologies used in this project are OCR and Google Text-to-Speech. Text detection from images is done using OpenCV, and optical character recognition with the Tesseract OCR engine. OpenCV is a real-time computer vision library used for digital image and video processing. Optical character recognition (OCR) is the technology that converts handwritten, typed, or printed text into machine-encoded text. The OCR process involves five stages: preprocessing, image segmentation, feature extraction, image classification, and post-processing. We also use the Efficient and Accurate Scene Text Detector (EAST), a simple yet powerful convolutional neural network (CNN) based method that detects text in natural scenes with high accuracy and efficiency. The text is translated to Tamil using the Google Translate services library, and text-to-speech conversion is done with the gTTS library. All the software is written in Python, using IDLE with Python 3.9.7, and is embedded in hardware using a Raspberry Pi 3 Model B+. Our prototype consists of glasses fitted with a webcam, and headphones. The Raspberry Pi, which has a push button, is fitted on the user's arm.

When the user presses the push button, the webcam fitted in the glass frame captures a picture. The image is then processed, the text characters present in it are extracted, and the text is translated to Tamil. Finally, the translated text is converted into audio output, which the user hears through the headphones. In this work, we plan to implement the EAST convolutional neural network on FPGA hardware, because it involves a large number of numerical calculations and is time-consuming when performed sequentially; the FPGA can carry out many of these calculations in parallel.
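
The cost argument can be made concrete with a naive convolution, the core operation of CNN layers such as those in EAST. The sketch below counts the sequential multiply-accumulate (MAC) operations; the helper names are hypothetical, and the layer sizes in the usage note are illustrative rather than EAST's actual dimensions.

```python
def conv2d_valid(image, kernel):
    """Single-channel 2-D convolution as used in CNN layers (cross-correlation, 'valid' padding).

    Returns (output, mac_count), where mac_count is the number of
    multiply-accumulate operations performed one after another.
    """
    ih, iw = len(image), len(image[0])
    kh, kw = len(kernel), len(kernel[0])
    oh, ow = ih - kh + 1, iw - kw + 1
    out = [[0.0] * ow for _ in range(oh)]
    macs = 0
    for r in range(oh):
        for c in range(ow):
            acc = 0.0
            # Each output pixel is an independent sum of kh * kw products.
            for i in range(kh):
                for j in range(kw):
                    acc += image[r + i][c + j] * kernel[i][j]
                    macs += 1
            out[r][c] = acc
    return out, macs


def conv_layer_macs(out_h, out_w, in_ch, out_ch, k):
    """MACs for one full conv layer: each output value needs k * k * in_ch MACs."""
    return out_h * out_w * out_ch * in_ch * k * k
```

For example, `conv_layer_macs(128, 128, 64, 64, 3)` gives 603,979,776 MACs for a single 3×3 layer with 64 input and 64 output channels on a 128×128 feature map. Because each output value is computed independently, an FPGA can instantiate many MAC units and evaluate them in parallel instead of sequentially.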

Health

DEVELOPMENT OF MULTIPLE MOBILE ROBOTS FOR HEALTHCARE APPLICATIONS USING FPGA

AP068

The purpose of our project is to implement a Multiple Mobile Robots (MMR) system that plans and controls the execution of logistics tasks by a fleet of mobile robots in a real-world hospital environment. The MMR is built on an architecture that hosts a routing engine, a supervisor module, controllers, and a cloud service. The routing engine handles the geo-referenced data and the calculation of routes. The supervisor module implements algorithms to solve the task allocation problem and the trolley loading problem, and provides a temporal estimate of each robot's position at any given time so that the robots' movements can be synchronized. The cloud service provides a messaging system to exchange information with the robotic fleet, while the controllers implement the control rules that ensure the work plan is executed on individual robots. The proposed MMR is designed to provide safe, efficient, and integrated indoor robotic fleets for logistics applications in healthcare and commercial spaces. Moreover, a computational analysis is performed using a virtual hospital floor plan.
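
The routing engine's route calculation and a basic form of task allocation can be sketched together. This uses Dijkstra's shortest-path algorithm on a graph of floor-plan waypoints and a greedy rule that gives each task to the free robot with the shortest route to its pickup point. Both are illustrative stand-ins: the proposal does not state which routing or allocation algorithms the MMR uses, and the graph format, function names, and data shapes are hypothetical. Trolley loading and temporal position estimation are not modelled here.

```python
import heapq


def shortest_distances(graph, source):
    """Dijkstra's algorithm. graph: {node: [(neighbour, metres), ...]}."""
    dist = {source: 0.0}
    heap = [(0.0, source)]
    while heap:
        d, node = heapq.heappop(heap)
        if d > dist.get(node, float("inf")):
            continue  # stale queue entry; a shorter path was already found
        for nxt, w in graph.get(node, []):
            nd = d + w
            if nd < dist.get(nxt, float("inf")):
                dist[nxt] = nd
                heapq.heappush(heap, (nd, nxt))
    return dist


def allocate_tasks(graph, robots, tasks):
    """Greedy allocation: each task, in order, goes to the free robot with the
    shortest route to the task's pickup node.

    robots: {robot_id: current_node}; tasks: [(task_id, pickup_node), ...]
    """
    free = dict(robots)
    plan = {}
    for task_id, pickup in tasks:
        if not free:
            break  # more tasks than robots; remaining tasks wait for the next round
        best = min(
            free,
            key=lambda r: shortest_distances(graph, free[r]).get(pickup, float("inf")),
        )
        plan[task_id] = best
        del free[best]
    return plan
```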

Other: BIODIVERSITY CONSERVATION

FFPCAM - Forest Fire Prediction & Conservation by Aerial Monitoring

AP069

According to NIFC, a total of 8,054 wildfires occurred in California in 2018, burning 1.8 million acres.

The 2019–2020 bushfire season in Australia burned over 46 million acres of forest and destroyed over 10,000 structures.

The project aims to build fire-forecasting capabilities using deep learning, and to improve their accuracy, geographic coverage, and time scale by including additional variables or optimizing the model architecture and hyperparameters, in order to conserve and sustain forests for the future.

Data acquired from the sensors is processed on the Intel FPGA by the implemented deep learning algorithm and sent to a connected Azure-based cloud server, which stores the real-time data for each factor and passes it to our model. The model then analyzes the data and uploads its results back to the cloud.
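
Before sensor readings reach a deep learning model, they are typically normalized and cut into fixed-length windows. The sketch below shows min-max normalization and sliding windows in plain Python; the feature columns (for example temperature and humidity) and the window sizes are illustrative assumptions, since the proposal does not list which factors are measured or what input shape the model expects.

```python
def min_max_normalise(readings):
    """Scale each feature column to [0, 1] so sensors with different units are comparable.

    readings: list of per-timestep feature vectors, e.g. [temperature, humidity].
    """
    cols = list(zip(*readings))
    lows = [min(c) for c in cols]
    highs = [max(c) for c in cols]
    return [
        [(v - lo) / (hi - lo) if hi > lo else 0.0 for v, lo, hi in zip(row, lows, highs)]
        for row in readings
    ]


def sliding_windows(readings, window, step=1):
    """Split a time series into overlapping fixed-length windows for the model input."""
    if window <= 0 or step <= 0:
        raise ValueError("window and step must be positive")
    return [readings[i:i + window] for i in range(0, len(readings) - window + 1, step)]
```

In deployment, the minimum and maximum would normally be fixed from the training data rather than recomputed on each batch, so that the model sees consistently scaled inputs.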

Food Related

Reduction in food wastage

AP071

IoT sensors are placed near areas where food is harvested and where dishes are prepared, collecting data such as expiry date and temperature. This data is sent to the cloud, where it is processed to decide whether to store the food or, if its expiry date is approaching, to distribute it to the needy.
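
The store-or-distribute decision could start as a simple rule before more advanced cloud analytics are added. In this sketch, the two-day distribution window, the 8 °C temperature limit, and the `inspect` outcome are hypothetical choices, not values from the proposal.

```python
from datetime import date


def decide(expiry: date, temperature_c: float, today: date,
           distribute_within_days: int = 2, max_safe_temp_c: float = 8.0) -> str:
    """Return 'inspect', 'distribute' or 'store' for one batch of food."""
    days_left = (expiry - today).days
    if days_left < 0 or temperature_c > max_safe_temp_c:
        return "inspect"      # expired or kept too warm: flag for a human check
    if days_left <= distribute_within_days:
        return "distribute"   # expiry is approaching: send to the needy now
    return "store"
```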

Health

Sign Language Interpreter

AP072

The system consists of two robotic hands with arms that work as a sign language interpreter. A sign language interpreter helps people communicate with those who were born deaf or have hearing loss. Over 5% of the world's population, or 430 million people, have hearing loss (432 million adults and 34 million children). It is estimated that by 2050 over 700 million people (one in every ten people) will have disabling hearing loss, so ways to communicate with them will be increasingly necessary. Our sign language interpreter will be able to perform up to 40-50 signs, including words and greetings (e.g., hello, goodbye), based on input given through a mobile application. This makes communication between a hearing person and a deaf person easier. For instance, suppose a person who does not know sign language wants to communicate with a person who cannot hear. He or she can simply type the message into the mobile application, and the robotic arms will convey it to the deaf person by performing the corresponding signs.
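
The mapping from app input to signs can be sketched as a vocabulary lookup. The gesture names in `SIGN_LIBRARY` and the function `to_sign_sequence` are hypothetical placeholders; in the real system each entry would correspond to a stored sequence of servo poses for the arms, and the library would hold the 40-50 supported signs.

```python
# Hypothetical vocabulary: each supported word maps to a named gesture that the
# arm controller would play back as a stored sequence of servo poses.
SIGN_LIBRARY = {
    "hello": "SIGN_HELLO",
    "goodbye": "SIGN_GOODBYE",
    "thank": "SIGN_THANK",
    "you": "SIGN_YOU",
}


def to_sign_sequence(message, library=SIGN_LIBRARY):
    """Translate text typed in the mobile app into an ordered list of gestures.

    Returns (gestures, unsupported): words found in the library become gestures;
    words outside the supported vocabulary are collected so the app can warn the user.
    """
    gestures, unsupported = [], []
    for raw in message.lower().split():
        word = "".join(ch for ch in raw if ch.isalpha())  # strip punctuation
        if not word:
            continue
        if word in library:
            gestures.append(library[word])
        else:
            unsupported.append(word)
    return gestures, unsupported
```

Returning the unsupported words, rather than silently dropping them, lets the app tell the user which parts of the message the arms cannot sign.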

Health

Gesture Controlled Robotic Gripper Arm through Kinect Sensor

AP073

Humans need to perform many hazardous tasks that are dangerous to carry out in person. Our product allows the user to control a robotic gripper arm through a Kinect sensor, which provides better control and more freedom to the user in a real-time environment. This allows humans to complete these risky operations without endangering themselves. We designed this project to be helpful in both industrial and medical fields. The idea of the project is to design a robotic gripper arm that is controlled through gestures: the arm is controlled through a Kinect sensor connected to a PC, and the robotic structure mirrors the hand and elbow movements of an actual arm as captured by the Kinect sensor. The goal of the project is to create a robot arm that fully replicates the movement of a human hand and arm, and that can help a user remotely grip and lift an object. In this project, real-time interaction among the environment, humans, and the machine is achieved through the machine vision system and the robotic arm.
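
Mirroring the elbow reduces to computing the joint angle from three tracked skeleton points and sending it, clamped, to the corresponding servo. The sketch assumes the shoulder, elbow, and wrist positions have already been read from the Kinect's skeleton tracking as 3-D coordinates; no Kinect SDK calls are shown, and the 0-180° servo range is an assumption.

```python
import math


def joint_angle(a, b, c):
    """Angle in degrees at joint b formed by points a-b-c (3-D tuples).

    For the elbow: a = shoulder, b = elbow, c = wrist.
    """
    v1 = [a[i] - b[i] for i in range(3)]
    v2 = [c[i] - b[i] for i in range(3)]
    dot = sum(p * q for p, q in zip(v1, v2))
    n1 = math.sqrt(sum(p * p for p in v1))
    n2 = math.sqrt(sum(q * q for q in v2))
    if n1 == 0 or n2 == 0:
        raise ValueError("joints must be distinct points")
    cos_t = max(-1.0, min(1.0, dot / (n1 * n2)))  # clamp floating-point rounding error
    return math.degrees(math.acos(cos_t))


def to_servo_command(angle_deg, servo_min=0.0, servo_max=180.0):
    """Clamp the human joint angle into the servo's mechanical range."""
    return max(servo_min, min(servo_max, angle_deg))
```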

Health

Sign Language Interpreter

AP074

The system consists of two robotic hands with arms that work as a sign language interpreter. A sign language interpreter helps people communicate with those who were born deaf or have hearing loss. Over 5% of the world's population, or 430 million people, have hearing loss (432 million adults and 34 million children). It is estimated that by 2050 over 700 million people (one in every ten people) will have disabling hearing loss, so ways to communicate with them will be increasingly necessary. Our sign language interpreter will be able to perform up to 40-50 signs, including words and greetings (e.g., hello, goodbye), based on input given through a mobile application. This makes communication between a hearing person and a deaf person easier. For instance, suppose a person who does not know sign language wants to communicate with a person who cannot hear. He or she can simply type the message into the mobile application, and the robotic arms will convey it to the deaf person by performing the corresponding signs.

Smart City

Advanced Automatic Number Plate Recognition System

AP075

Recognizing vehicle number plates is a much-needed capability. Such systems are already deployed in many countries, but we want to advance them further. We therefore propose a system that automatically recognizes vehicle number plates using image processing. We define a zone (area); when a vehicle enters the parking area, the camera automatically detects its number plate. The camera will also detect the type, make, and model of the vehicle. This could set a new benchmark for security systems as well as for automatic toll deduction.
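
The zone check ("when a vehicle enters the parking area") can be sketched with a standard ray-casting point-in-polygon test. The zone polygon in image pixels, the bounding-box format `(x, y, w, h)`, and the use of the box's bottom-centre as the vehicle's position are assumptions; the vehicle bounding box would come from whatever detector the system uses.

```python
def point_in_zone(point, zone):
    """Ray-casting test: is point (x, y) inside polygon `zone` [(x, y), ...]?

    Casts a horizontal ray to the right and counts edge crossings;
    an odd count means the point is inside.
    """
    x, y = point
    inside = False
    n = len(zone)
    for i in range(n):
        x1, y1 = zone[i]
        x2, y2 = zone[(i + 1) % n]
        if (y1 > y) != (y2 > y):  # edge straddles the ray's y coordinate
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def should_read_plate(vehicle_box, zone):
    """Trigger plate recognition when the bottom-centre of the vehicle's
    bounding box (x, y, w, h in image pixels) lies inside the zone."""
    x, y, w, h = vehicle_box
    return point_in_zone((x + w / 2, y + h), zone)
```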

Other: Agricultural

IoT-based agriculture monitoring systems

AP076

Agriculture has been one of the primary occupations of humankind since early civilizations, and even today manual intervention in farming is inevitable. Many plants are very sensitive to water levels and require a specific level of water supply for proper growth; otherwise, they may die or grow improperly. It is hardly possible for every farmer to have perfect knowledge of the water requirements of every plant. To help, we propose a project named Smart Sensors Based Monitoring System for Agriculture Using FPGA. Its objectives are water saving, improved agricultural yield, and automation in agriculture. Using sensors, farmers will be kept informed of changing moisture, temperature, and humidity levels, so that they can schedule the water supply and everything else required for healthy plant growth at the proper times. The system can also be used in greenhouses to control important parameters such as temperature, soil moisture, and humidity as required for proper plant growth.
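
Turning moisture readings into a watering schedule is commonly done with hysteresis (two thresholds) rather than a single cut-off. The thresholds below and the class name `IrrigationController` are hypothetical and would depend on the crop; temperature and humidity, which the system also monitors, are left out of this minimal sketch.

```python
class IrrigationController:
    """Hysteresis (on/off) control of a water pump from soil-moisture readings.

    The pump turns on when moisture drops below `low` and stays on until
    moisture rises above `high`, which avoids rapid toggling near one threshold.
    """

    def __init__(self, low: float = 30.0, high: float = 45.0):
        if low >= high:
            raise ValueError("low threshold must be below high threshold")
        self.low = low      # % soil moisture (hypothetical values)
        self.high = high
        self.pump_on = False

    def update(self, moisture: float) -> bool:
        """Feed one moisture reading and return whether the pump should run."""
        if not self.pump_on and moisture < self.low:
            self.pump_on = True
        elif self.pump_on and moisture > self.high:
            self.pump_on = False
        return self.pump_on
```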

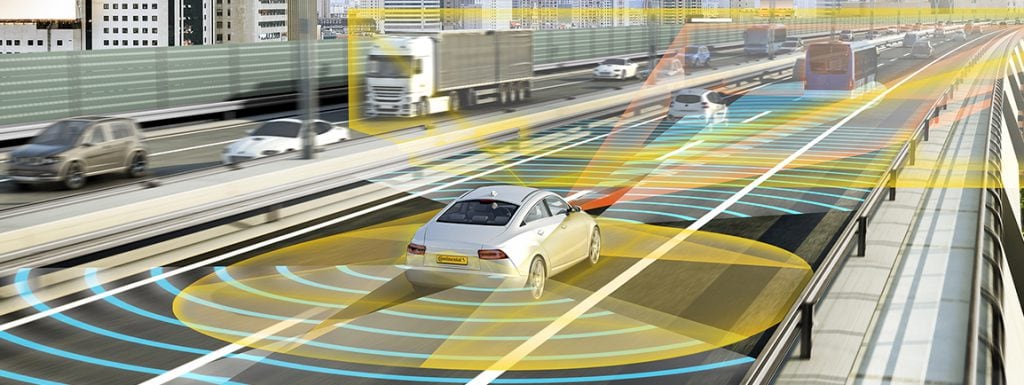

Autonomous Vehicles

Image Reconstruction Using FPGA for Adaptive Vehicle Systems

AP077